I S K O

Encyclopedia of Knowledge Organization

Hypertext

by Riccardo Ridi

Table of contents:

1. Definition

2. Characteristics, components and typologies

2.1 Characteristics of hypertexts

2.2 Rhizomes and hypotexts

2.3 Graph theory

2.4 Components of hypertexts

2.5 Typologies of hypertexts

2.6 Serendipity

2.7 Browsing

2.8 Orientation in hypertexts

3. History

3.1 The word

3.2 Prehistory

3.3 Memex

3.4 NLS

3.5 Xanadu

3.6 The first generation of hypertext systems

3.7 The second generation of hypertext systems

3.8 World Wide Web

3.9 The third generation of hypertext systems

4. Technological applications

4.1 Multimedia CD-ROMs and DVDs

4.2 Citation indexes

4.3 PageRank and relevance ranking

4.4 OpenURL and reference linking

4.5 Semantic web and linked data

4.6 Social networks

5. Conceptual applications

5.1 Hypertextuality of literature and games

5.2 Hypertextuality of knowledge organization systems

5.3 Hypertextuality of memory institutions and of the universe

6. Conclusions

Acknowledgments

Endnotes

References

Colophon

Abstract

Hypertexts are multilinear, granular, interactive, integrable and multimedia documents describable with graph theory and composed of several information units (nodes) interconnected by links that users can freely and indefinitely cover by following a plurality of possible different paths. Hypertexts are particularly widespread in the digital environment, but they existed (and still exist) also in non-digital forms, such as paper encyclopedias and printed academic journals, both consisting of information subunits densely linked between them. This article reviews the definitions, characteristics, components, typologies, history and applications of hypertexts, with particular attention to their theoretical and practical developments from 1945 to present day and to their use for the organization of information and knowledge.

1. Definition

A hypertext is a → document (or a set of documents) composed of several information units (called nodes), connected between them by links chosen a priori by those who produce the document itself (who select them among all logically possible links) and a posteriori by those who read the hypertext, deciding for themselves to cover it by following each time a particular path among the many that have been made possible by the authors or, in some cases, even by creating new ones (Nelson 1965; Nielsen 1990; McKnight, Dillon and Richardson 1992, 226-229; Welsch 1992, 614-616; ISO 2001, 4.3.1.1.19; Léon and Maiocchi 2002, 29-45; Landow 2006, 2-6; Alberani 2008, 147-149; Eisenlauer 2013, 65). “The essential principle of hypertext” is thus “the ability to move without interruption from one information resource to another” (Feather and Sturges 2003, 232) following a plurality of possible paths. This fundamental characteristic of hypertexts – sometimes called also hyperdocuments (Martin 1990; Woodhead 1991, 3) – is often referred to generically as hypertextuality (Cicconi 1999; Oblak 2005) or, more rarely but more specifically as multilinearity (Bolter 2001, 42; Landow 2006, 1), nonlinearity (Aarseth 1994; Blustein and Staveley 2001, 302) or nonsequentiality (Nielsen 1995, 348; Carter 2003).

Some authors restrict the definition of hypertext only to textual documents, preferring the term hypermedia to refer to multimedia hypertextual documents (Prytherch 2005, 332-333; Dong 2007, 234). Others use the term hypertext to refer exclusively to multilinear documents of digital type (Conklin 1987; Kinnell and Franklin 1992; Eisenlauer 2013, 58-59; Wikipedia 2017a) or, even (in non-specialized sources), as a mere synonym for digital document (that is to say any information resource usable with a computer, regardless of its greater or lesser multilinearity). In this article we will rather adopt an extended interpretation of the concept of hypertext, in both the digital and paper environment and independent from the number and the nature of the media involved.

This article deals with hypertexts and hypertextuality understood as a modality of organization of knowledge, information and documents. Therefore, we will not discuss other meanings of such terms used in the field of semiotics and literary criticism [1].

2. Characteristics, components and typologies

2.1 Characteristics of hypertexts

The fundamental precondition of hypertextuality is granularity (Zani 2006), that is the property of documents that can be decomposed into smaller self-contained parts that still make sense and remain usable, such as the single entries of an encyclopedia. Indeed, only if a document can be decomposed into many nodes will it be possible to connect them in many different ways. Eisenlauer (2013, 64) prefers to call this characteristic “fragmentation” and divides it into intranodal and extranodal: “the former refers to the fragmentary text arrangement within one node, while the latter applies to the fragmentation across different nodes”.

Two other relevant characteristics of hypertextuality, in addition to granularity and to multilinearity, are interactivity and integrability. Interactivity (Léon and Maiocchi 2002, 79-81) or malleability is the possibility, for the reader, to creatively modify a document in ways unforeseen by the original author, adding nodes or links. Every hypertext is by definition interactive, at least in the minimal sense of allowing multiple reading paths freely chosen by the reader, but the extent of the creative intervention allowed to the user (which may be more or less radical) and the degree of permanence of the changes made (which may be more or less temporary) are variable.

Integrability (Cicconi 1999, 31-32) means indefinite extensibility, that is to say the property for which, following the links in a hypertext by moving from a node to another, one can reach any point, without ever arriving at any definitive end (or beginning). According to the greater or lesser level of integrability hypertexts can be divided into open (those from which you can “go out”, continuing your own path more or less long towards further hypertexts, as happens on the World Wide Web) and closed (those from which you cannot escape, because all links are directed towards the nodes of the same hypertext) (Jakobs 2009, 356; Eisenlauer 2013, 62-63). In this regard Roy Rada (1991a, 22 and 68) distinguishes between “small-volume hypertext or microtext, [i.e.] a single document with explicit links among its components” and “large-volume hypertext or macrotext [that] emphasizes the links that exist among many documents rather than within one document”. Integrability and interactivity are not completely independent of each other, since the only real possibility that a hypertextual system has to be always open to the outside, growing indefinitely, is to rely on the enrichment brought by always new readers and authors.

A last characteristic of hypertextuality is multimediality (Klement and Dostál 2015) or multimodality. This can be a property either of individual nodes (which can be texts in a strict sense, still or moving images, sounds, or a mixture of them) or of the structure of links between them, which can be based on schemes, diagrams, images or other non-textual organizations. These can make the whole structure of a hypertext map-oriented rather than index-oriented, by favouring spatial organization over temporal organization. While the latter is more typical of linear texts based on an ordered sequence, as in lists, when facing an image or a map readers can freely choose to pay attention to any of its parts, all simultaneously available to their gaze. For the spatial case, then, some prefer to adopt the term hypermediality (Antinucci 1993).

Hypertextuality, decomposable, as we have seen, into multilinearity, granularity, interactivity, integrability and multimediality (Eisenlauer 2013, 63-65), can be considered not just as a discrete property, either possessed or not by a document, but also as a continuum moving without leaps from a minimum degree to a maximum degree, both overall and with respect to each of these characteristics (Fezzi 1994). One can find documents provided with greater multilinearity, like encyclopedias, dictionaries, websites or linked data stores, as well as documents with less multilinearity, like a poem, a song or a movie. Or one can notice that digital documents are, in general, more malleable than traditional ones, even if a card file is more easily customizable (both with regard to its contents and its arrangement) than any film on DVD with extremely primitive indexes or any e-book editorially “armoured” by a digital rights management system that prevents any modification or data extraction. A unilinear document is only a particular case of a very simple multilinear document, just as a textual or audio document is only a particular case of a very simple multimedial document because, from a certain point of view, all documents are hypertexts, more or less rich and complex (Fezzi 1994).

2.2 Rhizomes and hypotexts

Hypertexts should not be confused (as Robinson and McGuire 2010 and Tredinnick 2013 do) with rhizomes (Deleuze and Guattari 1976; Eco 1984, 112; Landow 2006, 58-62; Eco 2007, 59-61; Mazzocchi 2013, 368-369), which constitute the limit case of hypertexts in which each node is mechanically linked to all the other nodes belonging to the same document, without selection by their author (Finnemann 1999, 27), among all the logically possible links, of only those considered to be useful, meaningful or at least sensible. Therefore rhizome is the term that can be used to indicate those hypertexts (though neither very widespread nor particularly useful) so radically multilinear as to provide links from each node to all other nodes. Similarly, Ridi (1996) proposed the term hypotext (understood in a different way from Genette 1982 [1]) to indicate documents with little hypertextuality and, in particular, those so little multilinear as to be configured as unilinear documents in which each node is linked only to the previous node and to the next one, with the possible exceptions (in non-circular documents) of the first and the last node of the series.
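The two limit cases can be made concrete with a minimal Python sketch. The function names `rhizome_links` and `hypotext_links` are illustrative assumptions, not terms from the literature cited above, and links are modelled simply as ordered pairs of node names.

```python
from itertools import permutations

def rhizome_links(nodes):
    """Rhizome: every node is linked to every other node, with no selection."""
    return set(permutations(nodes, 2))

def hypotext_links(nodes, circular=False):
    """Hypotext: each node is linked only to the previous and the next node."""
    links = set()
    for a, b in zip(nodes, nodes[1:]):
        links.add((a, b))  # link to the next node
        links.add((b, a))  # link back to the previous node
    if circular and len(nodes) > 2:
        # In a circular document the last node also neighbours the first.
        links.add((nodes[-1], nodes[0]))
        links.add((nodes[0], nodes[-1]))
    return links

nodes = ["A", "B", "C", "D"]
print(len(rhizome_links(nodes)))                  # 12, i.e. 4 * 3 ordered pairs
print(len(hypotext_links(nodes)))                 # 6
print(len(hypotext_links(nodes, circular=True)))  # 8
```

Ordinary hypertexts fall between these extremes: their authors keep only a selected subset of the pairs that `rhizome_links` would generate.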

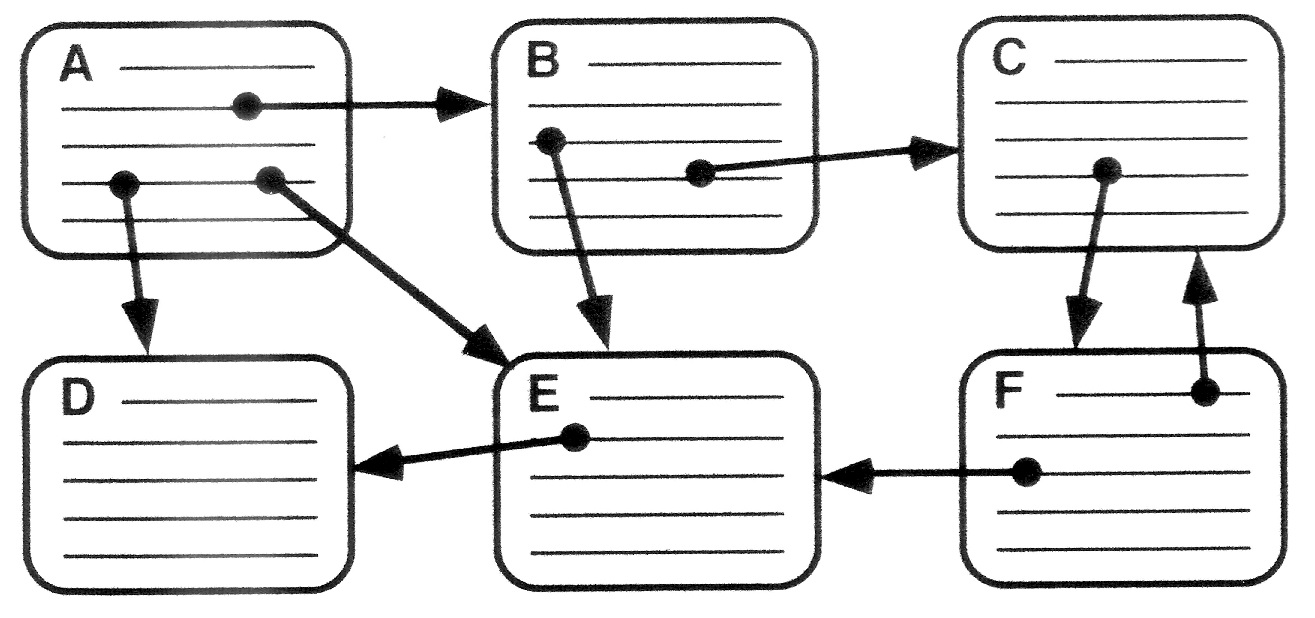

2.3 Graph theory

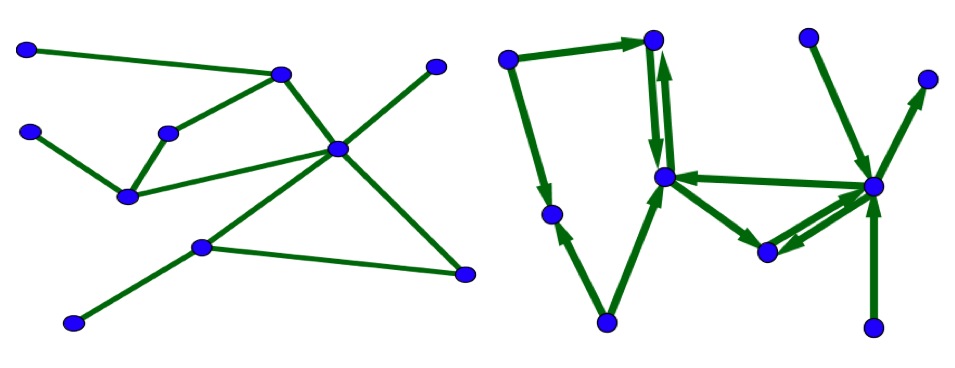

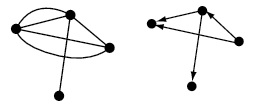

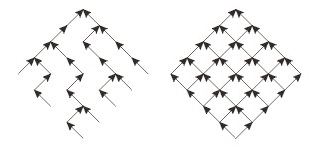

In addition to the limit-forms of the rhizome, of the unilinear document and of the circular document (Bernstein 1998, 22), hypertexts can also take any other shapes and sizes described by graph theory, that is the branch of mathematics which deals with the abstract objects consisting of a set of points (also called vertices or nodes) and of the possible set of lines (also called edges or arcs) that join them. Such a theory, applicable to many areas of reality, including hypertexts, distinguishes between the edges without orientation, which limit themselves to join together two vertices without establishing any particular order between them, and the edges (called arrows) that indicate a specific direction from a vertex to another. Directed graphs (or digraphs) are those in which at least a part of the edges has an orientation, while undirected graphs are composed exclusively of edges without orientation (Rosenstiehl 1979; Rigo 2016; Barabási 2016, 42-70).

Two vertices linked by an edge are said to be adjacent. A continuous sequence of edges is a path, which is called a cycle when, by following it, one returns to the initial vertex. The degree (or valency) of a vertex is the number of edges that link it to others or to itself. When the vertices, instead of being abstract mathematical entities, are made of something real and with specific characteristics, some authors prefer to speak of networks instead of graphs (Newman 2003), while others (Nykamp 2016) use these terms interchangeably. Among the main types of graphs are the following (Furner, Ellis and Willett 1996; Van Steen 2010; Sowa 2016; Rigo 2016; Barabási 2016, 42-70; Weisstein 2017):

- simple graphs, in which there are no loops, i.e. edges that connect a vertex to itself and increase its degree by two units. Unless otherwise stated, the unqualified term graph usually refers to a simple graph;

- rooted graphs, in which a specific vertex has been identified as the root of the graph itself;

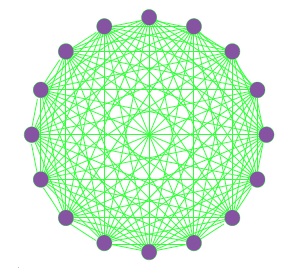

- complete graphs (under which rhizomes can be traced back), in which any pair of vertices is linked by at least one edge;

- multigraphs, in which a pair of vertices may be linked by more than one edge (as it may occur between two web pages, with various reciprocal links aimed at different points of the same pages);

- oriented graphs, that is to say the directed graphs whose pairs of vertices are never mutually linked by a symmetrical pair of arrows;

- trees, that is to say the graphs in which there is a unique path connecting any pair of vertices (and thus there are no cycles);

- lattices (or grids), which are graphs forming regular tilings.
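Some of the notions just listed can be checked mechanically. The following Python sketch assumes an undirected graph given as a list of `(u, v)` edges; all function names are illustrative. Two points go beyond the wording above and are assumptions of the sketch: `is_simple` also rejects parallel edges, as textbook definitions of simple graphs usually do, and `is_tree` uses the standard equivalent characterization of a tree as a connected graph with one edge fewer than it has vertices.

```python
from collections import Counter
from itertools import combinations

def degrees(edges):
    """Degree of each vertex; a loop (u, u) adds two to the degree of u."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return dict(deg)

def is_simple(edges):
    """No loops and (assumption of this sketch) no parallel edges."""
    unordered = [frozenset(e) for e in edges]
    no_loops = all(len(e) == 2 for e in unordered)
    no_parallel = len(set(unordered)) == len(unordered)
    return no_loops and no_parallel

def is_complete(vertices, edges):
    """Every pair of distinct vertices is linked by at least one edge."""
    linked = {frozenset(e) for e in edges}
    return all(frozenset(pair) in linked for pair in combinations(vertices, 2))

def is_tree(vertices, edges):
    """Connected with exactly len(vertices) - 1 edges, hence without cycles."""
    if len(edges) != len(vertices) - 1:
        return False
    neighbours = {v: set() for v in vertices}
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)
    seen, stack = set(), [next(iter(vertices))]
    while stack:
        v = stack.pop()
        if v not in seen:
            seen.add(v)
            stack.extend(neighbours[v] - seen)
    return seen == set(vertices)

edges = [("A", "B"), ("B", "C"), ("C", "C")]   # the last edge is a loop
print(degrees(edges))                          # {'A': 1, 'B': 2, 'C': 3}
print(is_simple(edges))                        # False
print(is_tree(["A", "B", "C"], edges[:2]))     # True
```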

As in a hypertext a link that goes from node A to node B is a different thing from one that goes from node B to node A, hypertexts can be described (Botafogo, Rivlin and Shneiderman 1992; Furner, Ellis and Willett 1996; Mehler, Dehmer and Gleim 2004) as directed graphs (or, better, as directed networks, since they are concrete) whose vertices are constituted by information units, and all the mathematical concepts, properties and formulas of graph theory can be applied to them, including (Rosenstiehl 1979; Van Steen 2010; Rigo 2016):

- connectivity: a pair of vertices of a graph is said to be connected if there exists at least one path that connects them, and a graph devoid of disconnected vertices is itself said to be connected. In a connected graph no vertex is unreachable from the others, unlike the World Wide Web, where there are isolated pages without even a link from other web pages addressed to them and that can therefore be represented by a disconnected graph. The level of connectivity of a graph is differently definable with respect to various parameters and therefore calculable using various formulas;

- density: the more the number of the edges of a graph approaches the maximum number of those mathematically possible given the number of vertices, the more it can be said that the graph is dense, meaning that it approaches the typical completeness of rhizomes. Inversely the graphs furthest from completeness are called sparse, that is with few edges. The density, like the connectivity, is defined and calculated in various ways depending on the selected parameters and the types of graphs.
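Since both measures can be defined in several ways, the following Python sketch fixes one choice for each and should be read under those assumptions: density is taken as the share of the n(n-1) possible directed links without loops that are actually present, and connectivity is approximated by listing the nodes reachable from a starting node along the direction of the links. The page names and both function names are invented for the example.

```python
def density(n_vertices, links):
    """Share of the n * (n - 1) possible directed links (loops excluded)
    that are actually present; 1.0 corresponds to a rhizome."""
    if n_vertices < 2:
        return 0.0
    return len(set(links)) / (n_vertices * (n_vertices - 1))

def reachable(start, links):
    """Nodes that can be reached from start by following links forwards."""
    outgoing = {}
    for source, target in links:
        outgoing.setdefault(source, set()).add(target)
    seen, stack = {start}, [start]
    while stack:
        for target in outgoing.get(stack.pop(), ()):
            if target not in seen:
                seen.add(target)
                stack.append(target)
    return seen

pages = ["home", "a", "b", "orphan"]
links = [("home", "a"), ("a", "b"), ("b", "home")]

print(density(len(pages), links))               # 0.25, i.e. 3 of 12 possible links
print(sorted(reachable("home", links)))         # ['a', 'b', 'home']
print(set(pages) - reachable("home", links))    # {'orphan'}
```

The page named `orphan` plays the role of the isolated web page mentioned above: no link points to it, so no path starting from `home` ever arrives there.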

2.4 Components of hypertexts

The relationship between graphs and the main components of hypertexts is well summed up by this definition by Jakob Nielsen (1990, 298):

Hypertext is non-sequential writing: a directed graph, where each node contains some amount of text or other information. The nodes are connected by directed links. In most hypertext systems, a node may have several out-going links, each of which is then associated with some smaller part of the node called an anchor. When users activate an anchor, they follow the associated link to its destination node, thus navigating the hypertext network. Users backtrack by following the links they have used in navigation in the reverse direction. Landmarks are nodes which are especially prominent in the network, for example by being directly accessible from many (or all) other nodes.
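The elements of this definition (nodes, anchors, directed links, navigation, backtracking and landmarks) can be illustrated by a small Python sketch. It is only one possible reading of the definition: the class names, the choice of storing anchors as a mapping from anchor text to destination node, and the threshold used to identify landmarks are all assumptions made for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    content: str
    # Anchor text -> name of the destination node (one outgoing link per anchor).
    anchors: dict = field(default_factory=dict)

class Hypertext:
    def __init__(self, nodes, start):
        self.nodes = {node.name: node for node in nodes}
        self.current = start
        self.trail = []  # nodes visited before the current one

    def follow(self, anchor):
        """Activate an anchor of the current node and move to its destination."""
        destination = self.nodes[self.current].anchors[anchor]
        self.trail.append(self.current)
        self.current = destination
        return destination

    def backtrack(self):
        """Go back along the last link followed, if there is one."""
        if self.trail:
            self.current = self.trail.pop()
        return self.current

    def landmarks(self, minimum_incoming):
        """Nodes directly accessible from at least minimum_incoming other nodes."""
        incoming = {name: 0 for name in self.nodes}
        for node in self.nodes.values():
            for destination in set(node.anchors.values()):
                if destination in incoming and destination != node.name:
                    incoming[destination] += 1
        return sorted(n for n, count in incoming.items() if count >= minimum_incoming)

home = Node("home", "Welcome", {"definition": "definition", "history": "history"})
definition = Node("definition", "What a hypertext is", {"home": "home"})
history = Node("history", "From Memex to the Web", {"home": "home"})

h = Hypertext([home, definition, history], start="home")
print(h.follow("history"))   # history
print(h.follow("home"))      # home
print(h.backtrack())         # history
print(h.landmarks(2))        # ['home']
```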

Nodes. Hypertext nodes, also referred to as lexia (Landow 2006), unlike graph nodes, are not mere abstract points with no properties, but → documents (Buckland 1997; 2016), i.e. physical entities in which signs interpretable as information are coded (in analog or digital mode). These documents can vary from the point of view of their size (ranging from a word to a novel), of their structure (ranging from the monolithic ones to others articulated in various levels of subunits) and of the type and number of the media involved (text, static image, moving image, audio and all their possible combinations). Typical examples of hypertext nodes are the cards of HyperCard (see section 3.7), the World Wide Web pages (see section 3.8) and the entries in an encyclopedia or in a dictionary. The more a certain amount of information is decomposed into small (as long as they are also self-explanatory) and numerous nodes, the greater will be its granularity (see section 2.1), which will allow the reaggregation into a more articulate hypertext.

Landmarks. In a hypertext, nodes can all have the same importance and visibility, or there may be some particularly relevant that the author assumes will be visited more often than others by readers. These nodes “that the user knows very well and which are recognized easily are called landmarks” (Neumüller 2001, 127). Typical examples are website homepages, summaries, indexes and the title pages of both paper and digital books, computer desktop screens and DVD menus.

Anchors. An anchor is a fragment (generally rather small and preferably meaningful) of a node from which a direct link is directed generically towards another node or, more specifically, towards a particular fragment (which sometimes is also called itself an anchor) of another node or of the same node where the source anchor is located. If the nodes involved are totally or partially textual, both the source anchor (i.e. the tail of the link) and the target anchor (i.e. the head of the link) can be a word or a phrase, while if the nodes include also or only images, one of them can perform the anchor function. In books “footnotes are anchors which provide the necessary information to locate linked information. And the reader has the freedom to jump or not to the footnote. For this reason, hypertext has sometimes been called generalized footnote” (Fluckiger 1995, 262). In the digital environment, source anchors are often highlighted (for example with a particular color or with an underlining) and can be “activated” with a procedure (for example a mouse “click”) that leads the user to view the target anchor.

Anchor. An area within the content of a node which is the source or destination of a link. The anchor may be the whole of the node content. Typically, clicking a mouse on an anchor area causes the link to be followed, leaving the anchor at the opposite end of the link displayed. Anchors tend to be highlighted in a special way (always, or when the mouse is over them), or represented by a special symbol. An anchor may, and often does, correspond to the whole node (also sometimes known as “span”, “region”, “button”, or “extent”) (CERN 1992).

Links and paths. Links (also called hyperlinks, especially in the digital environment) are what, in a hypertext, connects a pair of nodes or, more exactly, a pair of anchors to each other. Paths are continuous sequences of links that users follow during browsing. In non-digital hypertexts, links and paths often have an exclusively symbolic or conceptual nature, in the sense that the reading of the source anchor (often not graphically highlighted in any special way in a paper environment) allows the user aware of the corresponding language code to understand that more relevant information is available in a “documentary location” (either internal or external to the document that hosts the anchor) which can possibly be reached by the user thanks to his/her own autonomous movement in the “documentary space”. For example, finding in the text of a scientific article a pair of brackets that contain a short string of text followed by a space and by four digits, readers could (if they are sufficiently educated, experienced and motivated) understand, in order, that:

(a) it is a surname followed by a date;

(b) by leafing through the pages of the article they will find at its end an alphabetically arranged list of all the surnames+dates present in the text;

(c) by scrolling through that list until the desired couple surname+date is reached, they will find a few lines of text that, through a standardized coding not so obvious to understand, will provide them with the necessary information first to identify, then to locate and finally to possibly reach (on the shelf behind them or in a library at the other end of the world) the text on which the author of the article wanted to attract their attention for some reason.

The path that leads from the source anchor (surname+date) to the target node (the corresponding book or article) is in this case long, complex and entirely based on a series of decodings, decisions, actions and (often) physical movements all at the users’ expense. In the digital environment, instead, many of these decodings, decisions, actions and (virtual) movements are automatic and immediate, because the link takes the form of a series of instructions coded in the anchor and executed by the computer when the user decides to activate it. If the scientific article in the example were contained in an e-journal available on the World Wide Web, the surname+date anchor (visible to the user as a coloured and underlined text) could be associated with an instruction written in HTML that, if activated by the touch of a finger on the mouse or on the touch screen, would order the computer that the user is using to connect through the Internet to another remote computer on which the document published on that date by the author with that surname resides, and to display it on its screen. Therefore the digital link performs the same functions as the paper one, but automates and streamlines its steps, increasing that “ability to move without interruption from one information resource to another” (Feather and Sturges 2003, 232) that we have seen (see section 1) is so central to the definition of hypertextuality, because “true hypertext should […] make users feel that they can move freely through the information according to their own needs. This feeling is hard to define precisely but certainly implies short response times and low cognitive load when navigating” (Nielsen 1990, 298).
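The passage from the paper anchor to the digital one can be sketched in Python as follows. The sketch recognizes only bare anchors of the form "(Surname 1990)", looks each one up in a table of known locations and, when a location is found, wraps the anchor in an HTML `a` element. The function name `link_citations`, the lookup table and the `example.org` address are hypothetical, and the input text is assumed to be already safe to embed in HTML.

```python
import html
import re

# A surname (no digits or brackets), a space and four digits, inside brackets.
CITATION = re.compile(r"\(([^()\d]+?) (\d{4})\)")

def link_citations(text, locations):
    """Replace "(Surname 1990)" with an HTML link when a target is known.

    `locations` maps (surname, year) pairs to URLs; it stands in for the
    decoding that a paper reader performs with the reference list.
    """
    def replace(match):
        key = (match.group(1), match.group(2))
        url = locations.get(key)
        if url is None:
            return match.group(0)  # unresolved anchors stay as plain text
        label = html.escape(f"{key[0]} {key[1]}")
        return f'(<a href="{html.escape(url, quote=True)}">{label}</a>)'
    return CITATION.sub(replace, text)

locations = {("Nielsen", "1990"): "https://example.org/nielsen-1990"}
sentence = "Hypertext is a directed graph (Nielsen 1990)."
print(link_citations(sentence, locations))
# Hypertext is a directed graph (<a href="https://example.org/nielsen-1990">Nielsen 1990</a>).
```

Anchors that carry page numbers or several surnames are deliberately left untouched by this simplified pattern.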

Links can be distinguished and classified from various points of view (DeRose 1989; Hammwöhner and Kuhlen 1994; Signore 1995; Agosti, Crestani and Melucci 1997; Miles-Board, Carr and Hall 2002; Léon and Maiocchi 2002, 64-68):

- they can be created simultaneously with the document that contains their source anchors or be added later by the same author of the document or by other people. Especially in the case of a subsequent addition, instead of being decided and constructed one by one on the basis of a series of independent evaluations, they can sometimes be created by software on the basis of a previously set algorithm or criterion, therefore configuring themselves as automated links (Agosti and Melucci 2000);

- a particular case of automatically created links are the intensional ones, which instead of being explicitly and permanently stored in the hypertext as the extensional ones (which lead to a predetermined node) are created each time during the navigation in the hypertext on the basis of predefined procedures and parameters. This method is applied, for example, within the so-called reference linking (see section 4.4) to provide the users of the bibliographic databases with always up-to-date and valid links, leading them precisely to documents that could have changed their location and that are available only to those who have certain access rights;

- the link connecting two nodes can be unidirectional or bi-directional, thus forming a couple of links connecting the nodes in both directions. In some cases (as in blog linkbacks, also called refbacks, trackbacks or pingbacks) the second link can be produced automatically;

- structural links serve to facilitate the orientation and the movement between the various parts of the architecture of a hypertext without reference to the specific semantic content of the individual nodes, which instead is at the basis of the associative links. The anchors from which the main structural links start are sometimes concentrated in a specific section present in each node of the hypertext, called the navigation bar;

- implicit (or embedded) links, predominantly associative, are those that start from an anchor placed in the central part of the node, corresponding to the actual document, while explicit links, predominantly structural, are those located in peripheral areas of the node, before, after or alongside the actual document (Rada 1991a, 37; Bernard, Hull and Drake 2001);

- typed links (De Young 1990, 240-241) make explicit (by means of a symbol, a colour or other graphic devices) the relationship between the source anchor or node and the target anchor or node (that is to say the reason why the author of the hypertext decided to create that link, see Bar-Ilan 2005) without having to activate the link to understand it. Differentiated typed links can lead, for example, to the explanation of a term, to a bibliographic reference, to the quotation of a text, to a sound or graphic content, to the suggestion of another semantically or formally similar node, to a particular section of the node itself, to the contextualization of the node within the overall architecture of the hypertext to which it belongs, etc. Wikipedia, for example, lacks typed links: when clicking on an anchor, one never knows whether one will end up on a page that defines the meaning of that word in general or on one that discusses the object to which the anchor refers in the context of the specific entry from which one started;

- weighted links (Yazdani and Popescu-Belis 2013) are associated to a number or a symbol representing the intensity of the connection between the source anchor or node and the target anchor or node.
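Several of the distinctions above can be combined in a single data structure, sketched here in Python under stated assumptions. The `Link` class, its field names and the `linkback` helper are invented for the example: an extensional link stores a fixed `target`, an intensional link stores a `resolver` function that computes the destination only when the link is activated, `kind` carries the type of the link and `weight` its intensity.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass(frozen=True)
class Link:
    source: str
    target: Optional[str] = None                   # extensional: fixed destination
    resolver: Optional[Callable[[], str]] = None   # intensional: computed on activation
    kind: str = "associative"                      # label of a typed link
    weight: float = 1.0                            # intensity of a weighted link

    def activate(self):
        """Return the destination, computing it now for intensional links."""
        return self.resolver() if self.resolver is not None else self.target

def linkback(link):
    """Produce the reverse of an extensional link, making the pair bidirectional."""
    return Link(source=link.target, target=link.source, kind=link.kind, weight=link.weight)

fixed = Link("entry:hypertext", target="entry:graph", kind="explanation of a term", weight=0.8)
back = linkback(fixed)

# Hypothetical table of current document locations, updated over time.
current_location = {"Nielsen 1990": "https://example.org/old-shelf"}
dynamic = Link(
    "entry:hypertext",
    resolver=lambda: current_location["Nielsen 1990"],
    kind="bibliographic reference",
)
current_location["Nielsen 1990"] = "https://example.org/new-shelf"

print(fixed.activate())            # entry:graph
print(back.source, back.target)    # entry:graph entry:hypertext
print(dynamic.activate())          # https://example.org/new-shelf
```

Because the intensional link consults the table only when activated, it follows the document to its new location without being rewritten, which is the behaviour described above for reference linking.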

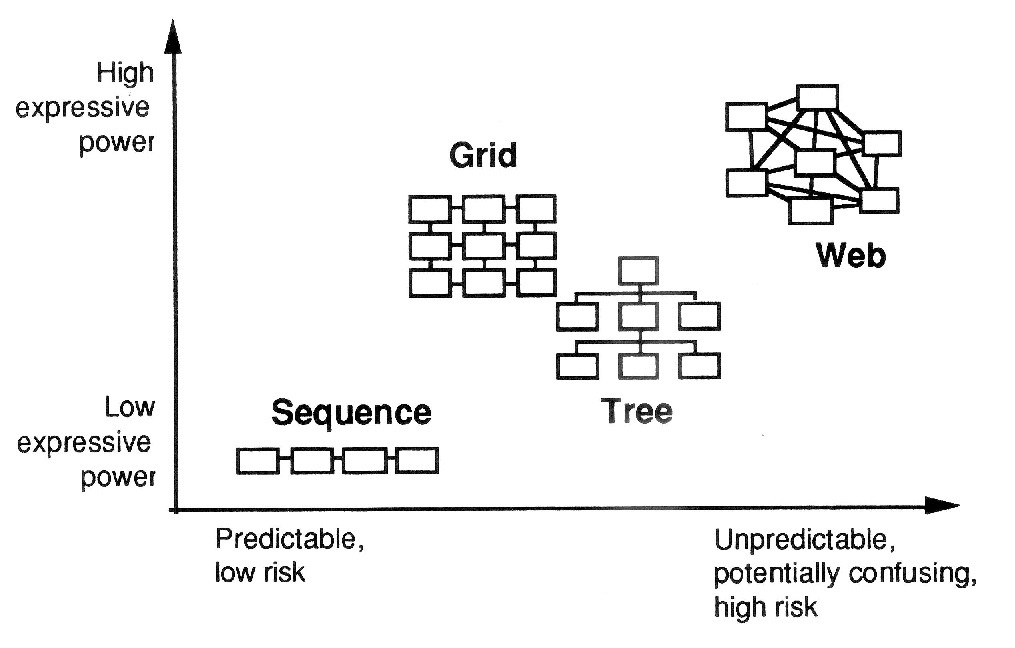

2.5 Typologies of hypertexts

From the foregoing it follows that the conceptual architecture of hypertexts is not opposed to such more classical organizational typologies of documents, information and knowledge as the unilinear list (Eco 2009), the hierarchical classificatory tree (Eco 2007, 13-96), which is a particular case of graph theory trees, or the orthogonal grid typical of databases (corresponding to the graph theory lattice). Sequence, hierarchy and grid can be considered simpler and more predictable types of hypertexts than an irregular and unpredictable structure such as the World Wide Web, which can however also include sites or sections organized just like sequences, hierarchies or grids. All the possible ways of connection among information units can be placed in some position within the conceptual field of hypertextuality.

The unilinear sequence is the simplest organizational structure to explore, but such simplicity is paid for by a low expressive and classificatory power while, at the other extreme, the high expressive and classificatory power of the radically hypertextual architectures is paid for by a low predictability and a high risk of getting lost during navigation (Brockmann, Horton and Brock 1989, 182-185).

2.6 Serendipity

In classical information retrieval, based on sequences, hierarchies and grids, one reaches the documents relevant with respect to a given purpose by means of subsequent refinements of the search, passing progressively from the general to the particular (or the reverse), without any significant increase in information during the process. One knows from the beginning what one is looking for, though maybe not what will be found (Lucarella 1990). In the more radical hypertexts instead (since the information is not only contained in the nodes, but also in the network, that is, in the structure of the links), by browsing one also discovers completely unknown information and may decide to abandon the designed search to undertake another one, according to the phenomenon of serendipity (Foster and Ford 2003; Merton and Barber 2004), typical of open shelf libraries.

According to Horace Walpole who coined the term [in 1754], serendipity has the following characteristics: first it involves unexpected, accidental discoveries made when looking for something else; second, it is a faculty or knack; third, these discoveries should occur at the right time. (Davies 1989, 274)

Serendipitous findings. One of the consequences of browsing in the library and through journals is finding something of interest or some things that are not originally sought. (Rice, McCreadie and Chang 2001, 182)

2.7 Browsing

In this article, the term browsing is used, consistently with the most common use in hypertext literature, as synonymous with navigating to indicate the process of shifting the user's attention from one node to another, proceeding along a path consisting of one or more links. This meaning is neutral with respect to the discussion between Marcia Bates and Birger Hjørland about the predominantly biological and behavioural (Bates 2007) or socio-cultural (Hjørland 2011) nature of the assumptions that guide the users during browsing.

In multilinear browsing, which is an expansion and enrichment of the unilinear scanning of lists and shelves, it is the search itself that creates the possibility of following new paths, reaching unexpected but (sometimes) relevant information content. There is less exhaustivity, but also greater creativity as compared to classic information retrieval and therefore it is an ideal search technique when one still does not know exactly what one wants to find (Lucarella 1990).

Hypertext's approach is to emphasize the semantic link structure of the web of text fragments, providing effective means to traverse the web of nodes as well as present the contents of these nodes. Conventional information retrieval systems emphasize searching, whereas hypertext emphasizes browsing via link traversal. Therefore, hypertext is more suited to users that wish to discover information or who have ill-defined information goals, rather than specific goal-oriented searching. Hypertext is an effective form of information retrieval because information gained relates by analogy to the starting information, rather than to an explicit query. (Milne 1994, 26)

An ideal information system should allow both search methods (the “orthogonal” one of the classic information retrieval and the “transversal” one of hypertextual browsing), complementary to each other (Croft 1990; Lucarella 1990; Agosti and Smeaton 1996; Agosti and Melucci 2000; Brown 2002), allowing library users, for example, to identify the most useful documents through (Ridi 2007, 139-148):

- submitting to the system a query on the entire text of the documents or on their metadata divided into fields in order to extract, also thanks to the use of Boolean operators, a subset of indexed documents that best meets the user’s information needs and that can be further combined with other subsets;

- scanning lists of sorted metadata (possibly nested one inside the other to compose a classificatory hierarchy) to explore the entire content of the system from one end to the other, until one finds what one was looking for;

- hypertextual browsing in the metadata and the full-text of primary documents, performed by following single links “from node to node” or by activating anchors that allow access to lists of sorted metadata to scan or that send queries to the system.
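The three access methods can be placed side by side in a toy Python sketch. The three records, their fields and the function names are invented for the example, and each function is a drastic simplification of the corresponding method: a Boolean AND/NOT query on title words, a scan of a sorted metadata list, and a browsing path that simply follows the first link of each node.

```python
documents = {
    "d1": {"title": "Hypertext and graphs", "subject": "graph theory", "links": ["d2"]},
    "d2": {"title": "Browsing libraries", "subject": "browsing", "links": ["d3"]},
    "d3": {"title": "Hypertext browsing", "subject": "browsing", "links": []},
}

def query(all_of=(), none_of=()):
    """Boolean query: every word in all_of and no word in none_of in the title."""
    hits = []
    for doc_id, record in documents.items():
        words = set(record["title"].lower().split())
        if all(w in words for w in all_of) and not any(w in words for w in none_of):
            hits.append(doc_id)
    return hits

def scan(field):
    """Sorted list of (metadata value, document id) pairs to read end to end."""
    return sorted((record[field], doc_id) for doc_id, record in documents.items())

def browse(start, max_steps):
    """Path obtained by following the first outgoing link of each node."""
    path = [start]
    for _ in range(max_steps):
        links = documents[path[-1]]["links"]
        if not links:
            break
        path.append(links[0])
    return path

print(query(all_of=["hypertext"], none_of=["graphs"]))  # ['d3']
print(scan("subject"))   # [('browsing', 'd2'), ('browsing', 'd3'), ('graph theory', 'd1')]
print(browse("d1", 5))   # ['d1', 'd2', 'd3']
```

In this toy collection the query and the browsing path both end at `d3`, but only browsing passes through `d2`, a document that the query did not ask for.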

2.8 Orientation in hypertexts

The main problem of large and complex hypertexts is the extreme ease with which the readers can lose orientation during the navigation, failing both to find what they are looking for and to understand what their position is in relation to the overall structure they are exploring (Waterworth and Chignell 1989; Satterfield 1992, 1-3; Landow 2006, 144-151). Another difficulty is the cognitive overhead necessary to decide how many and which links to follow in (or to add to) each node during the reading (or the writing) of a hypertext without losing sight of the initial or priority goal (Conklin 1987, 38-40; Ransom, Wu and Schmidt 1997).

To summarize, then, the problems with hypertext are disorientation: the tendency to lose one’s sense of location and direction in a nonlinear document; and cognitive overhead: the additional effort and concentration necessary to maintain several tasks or trails at one time (Conklin 1987, 40).

To avoid both these problems, many solutions have been devised. Some have already been mentioned in the preceding paragraphs (typed, structural and bidirectional links, navigation bars, landmarks) and others are listed below, but the most important (and the most difficult) one is good writing and coherent design, aware of the specific rhetoric of hypertext [2]. We therefore limit ourselves to listing only some of the tools that can help readers to orient themselves and to reduce cognitive overhead within a hypertext (Nielsen 1990; Gay and Mazur 1991; Satterfield 1992; Nielsen 1995, 247-278; Neumüller 2001, 117-145), noting that many manuals about information architecture (Rosenfeld, Morville and Arango 2015), web usability (Krug 2014) and human-computer interfaces (Shneiderman et al. 2017) devote many of their pages to these tools.

Interfaces. Non-digital hypertexts generally do not need special devices to be used, or at least they need only the same tools that are necessary to create, modify or use non-digital documents with an extremely low level of multilinearity. On the contrary, to be used on a computer or on another electronic device, digital hypertexts almost always need specific applications better equipped to handle nodes, links and multimediality than those normally used to create or use single texts, images, videos and sounds that are neither structured in subunits nor connected to each other by links. These interfaces for digital hypertexts were often called browsers (Conklin 1987) even before the term came to indicate, more specifically, the software for navigating the World Wide Web. Their function is to display nodes (often using familiar metaphors such as pages, cards, frames, etc.) and links in a two- or three-dimensional space, translating the user's decision to follow a particular path into a corresponding (but more intuitive) movement in the documentary space (McKnight, Dillon and Richardson 1989; 1992; Woodhead 1991, 104-111; Reyes-Garcia and Bouhaï 2017). Moreover, they allow users to manage the following orientation tools.

Backtracking. Various tools are collected under this name, which allow users to “retrace their steps” during the navigation of a hypertext (Nielsen 1990, 301-304; Neumüller 2001, 128-130), most of which are also implemented in almost all browsers that can be used to move around the World Wide Web. The back button allows users to step backwards, that is to say to cover, in reverse, the last link followed, returning to the previous node. The chronology (or history list) is a list of the most recently visited nodes, arranged in reverse chronological order (Nielsen 1995, 252-254). Bookmarks are nodes that are stored (and possibly annotated and classified) by the user because they are considered to be particularly significant or because the user plans to return there in the future (Nielsen 1995, 254-257).
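As an illustration only, the three backtracking tools just described can be sketched in a few lines of Python. The class name `NavigationSession` and all of its methods are hypothetical and do not reproduce the behaviour of any particular browser; in particular, the choice to record a backward step as a new visit in the history is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class NavigationSession:
    """Tracks one reader's path through a hypertext (hypothetical helper)."""
    current: str
    back_stack: list = field(default_factory=list)  # nodes the back button can return to
    history: list = field(default_factory=list)     # every visit, oldest first
    bookmarks: dict = field(default_factory=dict)   # node -> annotation by the user

    def __post_init__(self):
        self.history.append(self.current)

    def follow(self, target):
        """Follow a link from the current node to `target`."""
        self.back_stack.append(self.current)
        self.current = target
        self.history.append(target)

    def back(self):
        """Cover the last followed link in reverse; do nothing at the start node."""
        if self.back_stack:
            self.current = self.back_stack.pop()
            self.history.append(self.current)  # assumption: a step back counts as a visit
        return self.current

    def history_list(self, limit=10):
        """Most recently visited nodes, newest first, without repeats."""
        seen, recent = set(), []
        for node in reversed(self.history):
            if node not in seen:
                seen.add(node)
                recent.append(node)
        return recent[:limit]

    def bookmark(self, note=""):
        """Store the current node, optionally annotated, for a later return."""
        self.bookmarks[self.current] = note
```

In this sketch the back button is a stack, the chronology is derived from a log of visits, and bookmarks are a separate user-managed collection, which is why the three tools can coexist without interfering with each other.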

Maps. Hypertext maps (De Young 1990, 240-241; Neumüller 2001, 126-127), like geographic ones, are simplified symbolic representations of a viable space that help users to locate their position in that space and to decide in which direction to move to reach the chosen destination. They are also called overviews (Nielsen 1995, 258-272); they can assume either a visual or a textual form and they can privilege, by highlighting them, different information contents, so there can be several maps for the same hypertext. Maps can be global, if they represent the entire hypertext to which they refer, or partial (i.e. local), and especially in the latter case they can sometimes also be contextual, that is to say they can present to the user the nodes surrounding the one where he/she is as if they were depicted from his/her point of view. Tables of contents of books, which list the chapters in the same order in which they actually follow one another, can be considered as global textual maps, while Internet addresses (URLs), with their structure reflecting that of site directories, can be considered as local textual maps. Local maps can also take the form of representations of the path that the user has traced up to that moment, with the crossed nodes that can be reached directly from the map itself.
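The difference between global and local (contextual) maps can be illustrated with a short sketch in graph terms. It assumes that the hypertext is given as a dictionary mapping each node to the list of nodes it links to; the function name `local_map` and the `radius` parameter are hypothetical.

```python
from collections import deque


def local_map(links, start, radius=1):
    """Return {node: distance} for the nodes reachable from `start`
    by following at most `radius` links (breadth-first search)."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == radius:
            continue  # do not look beyond the edge of the local map
        for target in links.get(node, []):
            if target not in distances:
                distances[target] = distances[node] + 1
                queue.append(target)
    return distances


# Hypothetical toy hypertext: node -> nodes it links to.
links = {
    "home": ["history", "components"],
    "history": ["memex", "xanadu"],
    "memex": ["home"],
}
nearby = local_map(links, "home", radius=1)
```

Here `nearby` contains only the node being read and its immediate neighbours, as seen from the reader's own position; a sufficiently large `radius` would instead yield a map of everything reachable from that node.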

Indexes. When the informative contents of the nodes are represented by metadata that are arranged not in the same order as the nodes but in a different one (for example alphabetical or classified) that facilitates searching, it is usually preferred to encode them in textual form, and we talk about indexes instead of maps or tables of contents. Classical examples are the subject indexes and the author indexes of books. Sometimes, especially in the digital environment, indexes, rather than appearing as simple lists, take the form of hierarchical trees or other articulated structures.

Breadcrumbs “provide a visual indicator that a particular node has been visited, anchor has been activated, or link has been traversed. […] Eventually, breadcrumbs accumulate to the point where they are marking most places; at this point their utility is minimal” (Keep, McLaughlin and Parmar 2000), so it would be useful for users to be able to decide after how long they should disappear. Breadcrumbs and chronologies are sometimes associated with timestamps (Nielsen 1990, 302-303), which indicate the date and the time of the last visit or activation. In addition, breadcrumbs can be integrated with maps, appearing as footprints (Nielsen 1990, 303-304), which indicate on the maps, more or less permanently, the paths that have been followed.

Guided tours. The author of a particularly large and complex hypertext can decide to offer visitors, as an alternative to free browsing, one or more established paths that allow them to get a quick general idea of the whole document or to examine closely a particular theme or aspect, reducing the risks of being lost or neglecting the most important contents (Neumüller 2001, 124-125).

Social navigation. This denomination collects various methods (Neumüller 2001, 133-134) that use, in order to help users in choosing the direction to take, tips, comments or behaviours of other users or of professionals involved to help them, such as reference librarians. These suggestions can be explicit and “signed” (like an email from a friend who tells me the URL of a web page that he/she knows I might be interested in) or implicit and anonymous, like — in an online bookshop — the links that lead towards other books purchased by many other users who have bought the book I'm viewing.

Navigation by query. In many digital hypertexts it is possible to make a query that, sometimes also using Boolean operators, retrieves all the nodes (and/or all metadata associated with them) that contain one or more textual strings (Neumüller 2001, 134-137). The results of the query are presented to the user sorted by specific metadata (such as the surnames of the authors of the books included in a library catalogue) or by a complex, variable (and often secret) algorithm based on many factors that tries to produce a relevance ranking (like that of Google and of similar search engines) (see section 4.3). An additional way of presenting the results, which has recently become more widespread, subdivides them automatically into clusters according to a series of pre-defined facets.
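A minimal sketch of such a query, assuming that each node is just an identifier with some plain text, might look as follows. The function names `build_index` and `search` and the parameters `must`, `any_of` and `must_not` (standing for the Boolean operators AND, OR and NOT) are hypothetical, and results are simply sorted by node identifier rather than by any relevance ranking.

```python
import re


def build_index(nodes):
    """Map each lowercase word to the set of node ids whose text contains it."""
    index = {}
    for node_id, text in nodes.items():
        for word in set(re.findall(r"\w+", text.lower())):
            index.setdefault(word, set()).add(node_id)
    return index


def search(index, all_ids, must=(), any_of=(), must_not=()):
    """AND the `must` terms, OR the `any_of` terms, then drop the `must_not` terms."""
    results = set(all_ids)
    for term in must:
        results &= index.get(term.lower(), set())
    if any_of:
        results &= set().union(*(index.get(t.lower(), set()) for t in any_of))
    for term in must_not:
        results -= index.get(term.lower(), set())
    return sorted(results)  # stand-in for sorting by a chosen metadata field


# Hypothetical nodes: id -> text.
nodes = {
    "memex": "Bush proposed associative indexing on microfilm",
    "nls": "Engelbart built a collaborative system with links",
    "xanadu": "Nelson designed a system with bidirectional links",
}
index = build_index(nodes)
hits = search(index, nodes, must=["system", "links"], must_not=["bidirectional"])
```

In this toy collection `hits` contains only the node `nls`, since it is the only one that mentions both required terms without the excluded one.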

Go home anchors. When orientation is completely lost or when a new search is desired, it is very useful to have, in each node, an anchor that brings us home. However, the concept of home is not unique, so this anchor could activate a direct link to the main page of the hypertext that we are visiting (as in the navigation bars of the websites or in the DVD menus) or to an external node, which is our starting point for the exploration of any hypertext (as it happens by activating the “home” button of a web browser).

3. History

3.1 The word

Roy Rada (1991b, 659) relates the term hypertext to hyperbolic space, an expression coined at the beginning of the eighteenth century and popularized by the German mathematician Felix Klein at the end of the nineteenth century to describe a geometry with many dimensions. According to Rada (1991b, 659) “Ted Nelson coined the term hypertext in 1967 because he believed that text systems should reflect the hyperspace of concepts implicit in the text”. In fact, both the terms hypertext and hypermedia (and the less fortunate hyperfilm) already appeared in the paper (Nelson 1965) presented by Theodor Holm Nelson (see section 3.5) at a conference held in Cleveland between 24 and 26 August 1965 and were probably conceived by Nelson himself in 1963 (Nelson 2001).

Let me introduce the word “hypertext” to mean a body of written or pictorial material interconnected in such a complex way that it could not conveniently be presented or represented on paper. It may contain summaries, or maps of its contents and their interrelations; it may contain annotations, additions and footnotes from scholars who have examined it. Let me suggest that such an object and system, properly designed and administered, could have great potential for education, increasing the student’s range of choices, his sense of freedom, his motivation, and his intellectual grasp. Such a system could grow indefinitely, gradually including more and more of the world’s written knowledge. (Nelson 1965, 96)

In any case it is clear that both Nelson and Genette — who, as mentioned in endnote 1, reinvented the term in 1982 attributing a different meaning to it, probably without knowing of Nelson’s sense (Laufer and Meyriat 1993, 315) — have used the pre-existing prefix hyper-, both English and French (coming from the Greek ὑπέρ- that means “over”, “above” or “beyond”, cognate with the Latin super- and the Proto-Germanic uber-), to indicate something that enriches or enhances a normal text.

3.2 Prehistory

Like many other natural and cultural phenomena, hypertexts existed long before someone defined the concept and gave them a name. Cross-references, indexes, notes and bibliographical citations in encyclopedic, juridical and scientific texts were already substantially hypertextual even in the centuries before 1965 (Finnemann 1999, 22-23; Rowberry 2015).

In practice, very few printed documents are designed for linear reading. Novels are a notable exception. Encyclopedias, dictionaries, reference manuals, magazines, newspaper, and even the present text, are conceptually hypertext documents. They need not to be read sequentially. Most of the links may be followed locally as they point to other parts of the book. But others, like bibliographic references, point to external information. (Fluckiger 1995, 262)

The compilation of Jewish Oral Law with its rabbinical commentaries (Talmud originally means “learning”), Indian epics and Greek mythology have often been named as the first hypertextual constructions. […] The standard printed Talmud page (spanning many centuries of Jewish religious scholarship) consists of the core texts, commentaries by various authors (most important Rashi’s Commentary), navigational aids (such as page number, tractate name, chapter number, chapter name) and glosses. Most of these glosses are emendations to the text, while others contain useful (or cryptic) cross-references. Often these comments were copied from the handwritten annotations that the authors inscribed in the margins of their personal copies of the Talmud. (Neumüller 2001, 62-63)

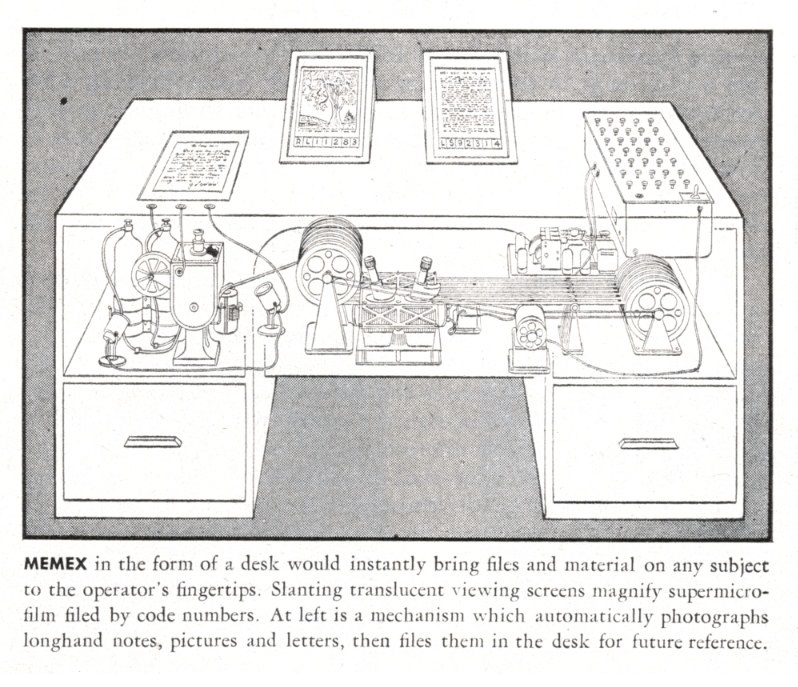

3.3 Memex

Even if W. Boyd Rayward (1994) found many aspects of hypertextuality in the theories and the achievements of the pioneer of documentation and information science (as well as cocreator of Universal Decimal Classification) Paul Otlet (1868-1944), all histories of modern (i.e. digital) hypertext begin with the Memex of Vannevar Bush (1890-1974), although it was never built and did not envisage the use of either digital documents or computers (Nyce and Kahn 1991; Nielsen 1995, 33-36; Castellucci 2009, 99-120; Barnet 2013, 11-35).

Bush was an American engineer and director of the Office of Scientific Research and Development (an agency of the United States federal government active from 1941 to 1947 to coordinate scientific research for military purposes during World War II) who, right towards the end of the conflict, in the summer of 1945, published an article (Bush 1945) which is probably still the single most cited document in hypertext literature. In it Bush envisaged that it would soon be possible to build a kind of well-equipped desk, called Memex (from “memory extender”), in which every scientist could store (on microfilms, by photographing them), annotate, connect, retrieve and read (by projecting them on small screens) all documents considered useful for his/her research.

The technology prefigured by Memex appeared futuristic at that time, but in reality it turned out to be not very far-sighted, given the rapid development of digital computers that was beginning in those years. However, what turned out to be particularly significant for the future development of hypertextuality was the principle proposed by Bush to connect together the information contents included in the archived documents.

Apart from the conventional form of indexing, Bush proposed «associative indexing, the basic idea of which is a provision whereby any item may be caused at will to select immediately and automatically another. This is the essential feature of Memex. The process of tying two items together is the important thing». Associative indexing would help to overcome our ineptitude in getting at a certain record which «is largely caused by the artificiality of systems of indexing. When data of any sort are placed in storage, they are filed alphabetically or numerically, and information is found (when it is) by tracing it down from subclass to subclass. […] The human mind does not work that way. It operates by association». (Neumüller 2001, 64-65, quoting Bush 1945)

3.4 NLS

For about fifteen years after the article of Bush (1945), while computers evolved from the 1945 ENIAC (of which just one specimen was built) to the 1951 Ferranti Mark 1 (the first one available commercially, of which a few dozen specimens were sold) and the 1962 Atlas (one of the first “supercomputers”, as they were called at that time), there was no significant development either in the theory or in the realization of hypertexts, also because computers at that time were so huge and expensive that it was not conceivable to use them for functions other than pure calculation (Nielsen 1995, 36). In the early 1960s, however, two figures at least as important for information science as Vannevar Bush (by whom they both declared themselves to be strongly influenced) began to be interested in hypertexts (which no one yet called by this name), more or less simultaneously but independently: Doug Engelbart and Ted Nelson.

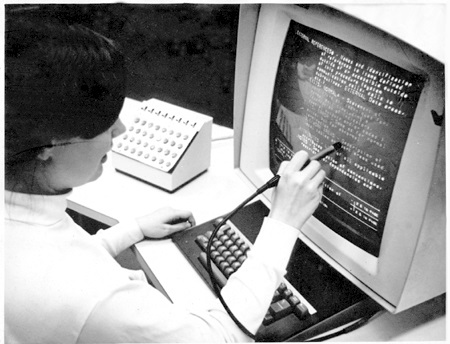

Douglas Carl Engelbart (1925-2013), best known as Doug Engelbart, was the American engineer who “more or less invented half the concepts of modern computing” (Nielsen 1995, 37), including the mouse, word processing, videoconferencing and graphical user interfaces later made famous by Macintosh and Windows. Many of these concepts were first developed (1959-1960) for the US Air Force and later at the Stanford Research Institute, and reached the stage of concrete pioneering achievements that were presented to the public in San Francisco on 9 December 1968 during what was later called “the mother of all demos” (Doug Engelbart Institute 2017), including one of the earliest connections between remote computers. The project of “augmenting human intellect”, illustrated for the first time by Engelbart (1962), included a system (already present in the 1968 presentation) for the collaborative management of textual documents called NLS (oN-Line System), which had various hypertextual characteristics, among which was the ability to create cross-references between documents created or archived by different users (Lana 2004, 114-135; Barnet 2013, 37-64).

The database structure of NLS was primarily hierarchical but with facilities for creating non-hierarchical links. Many of the features of later hypertext systems can be found in NLS including a database of non-linear text, “view” filters to suppress detail and selected information for display, and “views” to structure the information displayed. (Ellis 1991, 7)

Despite these very innovative results, in 1977 the US government suspended funding to Engelbart, who continued his studies in the field thanks to investments by private companies, while “several people from Engelbart’s staff went to Xerox PARC and helped invent many of the second half of the concepts of modern computing” (Nielsen 1995, 37).

3.5 Xanadu

Theodor Holm Nelson (1937-), better known as Ted Nelson, a son of the well-known American film director Ralph Nelson and of the Broadway and Hollywood actress Celeste Holm, graduated in philosophy in 1959, got a master's degree in sociology in 1963 and started in 1960 (Castellucci 2009, 51-78; Barnet 2013, 65-89; Dechow and Struppa 2015) to design a hypertextual system named in 1967 Xanadu (after the name of the first capital of Yuan dynasty’s Chinese empire in the poem Kubla Khan by Samuel Taylor Coleridge, published in 1816) that was never fully realized, although it was a fundamental source of inspiration for the World Wide Web, as its own inventor Tim Berners-Lee (1989; 1999) acknowledged. As seen in section 3.1, it was during this project, on which Nelson continued to work (the OpenXanadu prototype was released in 2014; see Hern 2014), that he coined the terms hypertext and hypermedia.

In the intentions of its creator, Xanadu was to be software running on a myriad of computers connected in a planetary network and completely replacing any other kind of storage (even at home). Absolutely all documents (Nelson is also the inventor of the term docuverse, i.e. document universe), even the most ephemeral and personal ones, would reside on Xanadu, protected from the gaze of others until the author decided to make them public, that is to say available on the entire network. From any document one could reach, through one or more passages, any other document, by following any kind of association. Creation and editing of documents would take place directly on the system, which would save every subsequent version of each document and would make it possible to cite any other document present in the network by simply opening a hypertextual window on it (Nelson 1990; Nielsen 1995, 37-39; Lana 2004, 139-158). “The system has no concept of deletion: once something is published, it is for the entire world to see forever. As links are created by users, the original document remains the same except for the fact that a newer version is created which would have references to the original version(s)” (Neumüller 2001, 66). Xanadu would therefore replace every word processor and every kind of publication, drawing together even more closely the very concepts of reading and writing.

Today, this description does not impress us very much, because it is not too different from the one of the World Wide Web that we use every day, but if we try to imagine reading it in the 1960s, in a world still without the Internet and personal computers, we understand why Nelson has often been called a “visionary”. After all, he himself was aware of the radicality, not only technological, of the project and of how it would reshape the entire world of information and communication.

What will happen to existing institutions is by no means clear: libraries, the schools, publishers, advertising, broadcast networks, government, may all try to fight these developments; which could impede progress for a while, but not indefinitely. Or they may recognize in them the new shape of their proper work. (Nelson 1990, 0/12)

However, it may be useful to recall some of the main differences between Xanadu and WWW, often reported by Nelson himself, who summarized his criticisms thus: “ever-breaking links, links going outward only, quotes you can't follow to their origins, no version management, no rights management” (Nelson 1999).

- WWW links are generally unidirectional and extensional, so they usually do not update automatically and do not allow readers to follow them backwards or to find out which pages contain links directed to the page being viewed. Exceptions may occur only if the manager of the linked page reciprocates with an inverse link or if additional independent software is activated. On the contrary, Xanadu links are always bidirectional and automatically updated.

- WWW pages often disappear into nothing, or, when updated, they make their previous contents disappear, overwritten by the new ones. Each different version of each Xanadu document is instead preserved and kept accessible forever.

- In the WWW there are numerous duplications of the same pages and of their informational contents, which make it difficult to distinguish the versions and to identify their chronology and relationships. On the contrary, in Xanadu, thanks to the transclusion method (another term coined by Nelson), information units are never duplicated, but they are displayed or included wherever they are useful but without compromising the uniqueness and priority of the original source.

- In the WWW the management of the right to access (for free or for a fee) to the informative contents is handled autonomously and independently by the respective managers with many different methods and criteria. On the contrary, in Xanadu to read or to quote a document one must pay a minimum amount directly to the holder of the corresponding copyright, through a unified system.
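Two of the differences listed above, bidirectional links and the permanent preservation of versions, can be illustrated with a toy data structure. This is only a sketch under simplifying assumptions and not a description of Xanadu's actual design: the class `LinkedStore` and its methods are hypothetical, and transclusion and payments are left out entirely.

```python
from collections import defaultdict


class LinkedStore:
    """Toy document store with append-only versions and two-way links (hypothetical)."""

    def __init__(self):
        self.versions = defaultdict(list)  # doc id -> every saved text, oldest first
        self.outgoing = defaultdict(set)   # doc id -> documents it links to
        self.incoming = defaultdict(set)   # doc id -> documents that link to it

    def save(self, doc_id, text):
        """Append a new version instead of overwriting; return its version number."""
        self.versions[doc_id].append(text)
        return len(self.versions[doc_id]) - 1

    def read(self, doc_id, version=-1):
        """Return the requested version (by default the latest one)."""
        return self.versions[doc_id][version]

    def link(self, source, target):
        """Record the link in both directions in a single step."""
        self.outgoing[source].add(target)
        self.incoming[target].add(source)

    def backlinks(self, doc_id):
        """Documents that link to `doc_id`, which a plain web page cannot list by itself."""
        return sorted(self.incoming[doc_id])
```

Because every link is registered at both ends when it is created, a reader of a document can ask which other documents point to it, and because saving never overwrites, earlier versions remain readable by number.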

3.6 The first generation of hypertext systems

While NLS and Xanadu remained at prototype or project level, the distinction of being the first software for the creation and management of hypertexts that was actually marketed belongs to HES (Hypertext Editing System), developed between 1967 and 1969 at Brown University by the Dutch-born American professor of computer science Andries van Dam (1937-) together with Ted Nelson. HES was funded by IBM, ran on an IBM 360/50 mainframe and was used, among others, by NASA to manage the documentation of the Apollo project and by the editorial staffs of The New York Times and Time/Life (Nielsen 1995, 40; Barnet 2010). At Brown University van Dam (this time without Nelson and after becoming acquainted with Engelbart's work) also developed from 1968 another software running on IBM mainframes: FRESS (File Retrieval and Editing System), networked and multi-user (while HES was single-user), which was the first software to have an “undo” feature, the first text editor with no restrictions on text length, and the first actually working hypertext system that allowed bidirectional links and provided maps to help orientation (Nielsen 1995, 40; Barnet 2010; 2013, 91-114).

After the exploits, in the 1960s, of precursors Engelbart, Nelson and van Dam, almost nothing significant happened in terms of hypertexts during the 1970s (Berk and Devlin 1991). Between 1970 and 1979, the LISA (Library and Information Science Abstracts) bibliographic database, queried in March 2017, recorded only one document (Schuegraf 1976) with the hyper* stem in the title, however not relevant as being related to “hyperbolic term distribution” in information retrieval [3]. Among the first generation software of that time, that is exclusively textual and managed on mainframe computers (Halasz 1988), we can mention at least ZOG (a pseudo-acronym with no special meaning), developed between 1972 and 1977 at Carnegie Mellon University, which was the first to adopt the “card” model later popularized by HyperCard (Nielsen 1995, 44).

3.7 The second generation of hypertext systems

The turning point in the fortunes of digital hypertexts was 1983, which not by chance coincided with the advent of personal computers (Ceruzzi 2012; Wikipedia 2017b), which had already appeared on the market in 1977 with the Apple II, the Commodore PET 2001 and the Tandy TRS-80 but which only at the beginning of the next decade began to spread massively with the IBM PC (1981), the Commodore 64 (1982), the Sinclair ZX Spectrum (1982) and the Apple Macintosh (1984). In the mid-1980s the first hypertextual systems of the second generation were created for the new home, school and small business market: they were multimedia, equipped with user-friendly interfaces and usable on personal computers (Halasz 1988). We list here only the most important ones, in chronological order of availability (Nielsen 1995, 44-62; Neumüller 2001, 67-71).

- 1983: KMS (Knowledge Management System), a direct descendant of ZOG, which ran on Unix workstations, allowed only two nodes (called “frames”) to be viewed at a time and provided a “home frame” directly accessible from all other frames.

- 1983: Hyperties, developed by Ben Shneiderman at the University of Maryland, running on PCs and on Sun workstations and providing anchors that could be activated either by clicking on them with a mouse or by using the arrow keys of the keyboard and that, before leading the user towards the target node, displayed a brief description of it within the source node.

- 1984: Guide, the first hypertext system available for Unix (from 1984), for Macintosh (from 1986), and for Windows (from 1987), originally designed by Peter Brown at the University of Kent, equipped with four different types of links.

- 1985: NoteCards (Halasz 1988; Ellis 1991), designed at Xerox PARC, particularly cited in the literature also because it has been well documented since the first stages of the project, running on Xerox and Sun computers, and whose nodes (called “notecards”) were rectangles of changeable sizes.

- 1985: Intermedia, developed at Brown University, which first allowed links to be directed not only towards entire nodes but also towards target anchors contained therein and which provided two types of maps for orientation: the “web view” produced by the system and the simplified maps created by users. Despite its excellent characteristics, Intermedia had little success and was abandoned in 1991 because it ran on the uncommon Unix version of Macintosh.

- 1987: Storyspace (Barnet 2013, 115-136), the first software specifically developed for creating and reading hypertext fiction, designed by Jay David Bolter and Michael Joyce and available for Macintosh and Windows (see section 5.1).

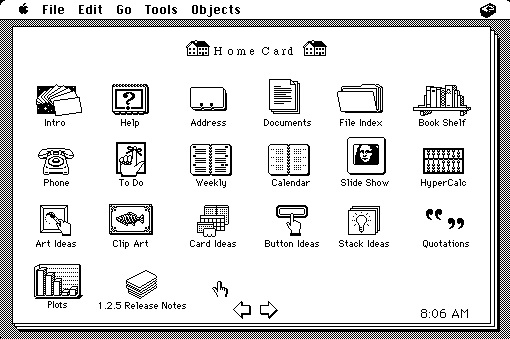

- 1987: HyperCard (Ellis 1991; Kinnell and Franklin 1992; Lasar 2012; LEM Staff 2014), designed by Bill Atkinson for Apple, which was probably the most widely used hypertextual software before the WWW, also thanks to its extremely powerful and intuitive programming language (HyperTalk) and because it was distributed free of charge with every Macintosh sold between 1987 and 1992 (and then commercialized, also for Windows, until 2004).

HyperCard is strongly based on the card metaphor. It is a frame-based system like KMS but mostly based on a much smaller frame size. Most HyperCard stacks are restricted to the size of the original small Macintosh screen even if the user has a larger screen. This is to make sure that all HyperCard designs will run on all Macintosh machines, thereby ensuring a reasonably wide distribution for Hypercard products. […] The basic node object in HyperCard is the card, and a collection of cards is called a stack. The main hypertext support is the ability to construct rectangular buttons on the screen and associate a HyperTalk program with them. This program will often just contain a single line of code written by the user in the form of a go to statement to achieve a hypertext jump. Buttons are normally activated when the user clicks on them, but one of the flexible aspects of HyperCard is that it allows actions to be taken also in the case of other events, such as when the cursor enters the rectangular region, or even when a specified time period has passed without any user activity. (Nielsen 1995, 58-59)

In November 1987, furthermore, the first international conference on hypertexts took place, organized by ACM (Association for Computing Machinery) at the University of North Carolina (DeAndrade and Simpson 1989). Between 1980 and 1989, the LISA database, queried in March 2017, recorded 102 documents with the stem hyper* in the title, all concentrated between July 1987 and December 1989, including the extensive, thorough and still much cited introduction written by Jeff Conklin (1987) for Computer, the journal of IEEE (Institute of Electrical and Electronics Engineers) [4]. The term hypertext was added to the descriptors of the bibliographic databases LISA in September 1988 and Library Literature in June 1989 (Laufer and Meyriat 1993). In the spring of 1989 the first issue of the first international academic journal entirely dedicated to the topic was published: Hypermedia, which in 1995 became The New Review of Hypermedia and Multimedia (Nielsen 1995, 62-66; Cunliffe and Tudhope 2010).

At the end of the 1980s, therefore, the concept, the term and the technology of hypertext were extremely popular and “fashionable” among both producers and users of computer products. It is therefore not surprising that a thirty-year-old physicist in charge of designing a system to keep in order the documentation of the research institute for which he worked looked for inspiration for his project in this field, ending up with the widest, most used and most influential hypertextual system of all time.

3.8 World Wide Web

Tim Berners-Lee (1955-), born in London and son of two British mathematicians who met while working on the Ferranti Mark 1 computer (see section 3.4), received a first-class bachelor of arts degree in physics at Oxford in 1976. After working as an engineer, he spent half a year in 1980 as an independent software consultant at CERN (European Organization for Nuclear Research) in Geneva, where he developed a card-based hypertext system called Enquire, with which he created a database of people and software applications that, however, was never used. Berners-Lee returned to CERN in 1984 with a fellowship (and, from 1987, as a staff member) to work on distributed real-time systems for scientific data acquisition and system control, re-elaborating Enquire to try to make it compatible with the Internet and with a plurality of databases and applications. The result was the incredibly fast timeline of the early years of the World Wide Web (Berners-Lee 1989; 1999; 2013; Gillies and Cailliau 2000; Connolly 2000; Castellucci 2009; Ryan 2010).

- 1989: In March TBL (Tim Berners-Lee) submitted to the management of CERN a proposal (Berners-Lee 1989) for the creation of a hypertextual system for managing internal documentation, for which no name was given (not even the provisional one of the time, which was Mesh). Some of the paragraph titles are worthy of note: “Losing information at CERN”, “Linked information systems”, “The problem with trees”, “The problem with keywords” and “A solution: hypertext”. The term hypertext is attributed to Nelson, who is even said to have “coined [it] in the 1950s”.

- 1990: In May TBL resubmitted his proposal, which had not yet been answered. In September TBL was authorized to buy a NeXT computer to work on the project, which, however, was not formally approved yet and was reformulated in November (Berners-Lee and Cailliau 1990) more operationally with Robert Cailliau, calling it for the first time WorldWideWeb (at that time without spaces). In November TBL created the first web server (nxoc01.cern.ch) on his NeXT and put the first web page on it, which could be viewed only by the two NeXT computers at CERN (including that of Cailliau) provided with the first (graphical) web browser, created by TBL himself, also called WorldWideWeb (but subsequently renamed Nexus to avoid confusion), and equipped with editing functions, thus being able to create, modify and display web pages. In December the student Nicola Pellow, who had joined the project, finished developing a second (textual) browser that ran on various operating systems other than that of the NeXT; thanks to it, by connecting via telnet to CERN, the first web page became potentially viewable from 20 December on computers around the world connected to the Internet.

- 1991: In May a web server was activated on the central CERN machines (info.cern.ch). During the same year other web servers were activated in some European physics research centers and TBL started maintaining a subject index of web servers that would later become The WWW Virtual Library, that is to say the oldest WWW catalogue, still active today. On 6 August (a day that is often erroneously considered to be the date of the public availability of the first web server) TBL posted a short summary of the WWW project on the alt.hypertext newsgroup, making it known to the community of Internet users. In October, the first mailing lists dedicated to the WWW were created. In December, TBL and Cailliau held the first presentation of the WWW outside CERN (in San Antonio, Texas, during the Hypertext'91 conference) and the first web server outside Europe (at Stanford University, California) was activated. By the end of the year there were around a dozen web servers in the world.

- 1992: Other browsers were developed inside and outside CERN, including Lynx (textual and still in use), ViolaWWW (graphical, for X Window) and Samba (graphical, the first for Macintosh). By the end of the year there were around thirty web servers in the world.

- 1993: In April CERN declared that it would waive any royalty from anyone who wanted to create servers, browsers or any other application for the WWW, thus encouraging its spread just two months after the University of Minnesota had announced that the free implementation of servers for the competing Gopher system (more rigid and less multimedia) would no longer be possible. In June the first browser for Windows (Cello) and the first web robot (Wanderer, used to measure the size of the WWW) became available. Between June and November, Marc Andreessen gradually released for free download from the site of the NCSA (National Center for Supercomputing Applications) at the University of Illinois various versions of the first graphical browser available for many platforms that integrated text and images in a single window (the highly successful Mosaic, whose name was initially even used by neophytes as a synonym for the WWW). In December important newspapers (The Economist, The Guardian, The New York Times) published articles on the WWW and Mosaic and the first web search engine (JumpStation) was created. By the end of the year there were around six hundred web servers in the world.

- 1994: In March Marc Andreessen left NCSA and founded a company that in December began selling the direct heir of Mosaic: Netscape (which would remain the most widely used browser until it was overtaken in 1998 by Microsoft Internet Explorer, itself derived from Mosaic). In May the first International WWW Conference was held at CERN. In July Time magazine devoted its cover to the Internet, to a large extent because of the explosive success of the WWW. In September TBL left CERN and the following month he founded the W3C (World Wide Web Consortium) at MIT (Massachusetts Institute of Technology). By the end of the year there were about 2,500 web servers in the world, which would become about 23,500 in mid-1995, over 200,000 in mid-1996 (Margolis and Resnick 2000, 42) and more than six million in February 2017 (Netcraft 2017).

Ironically, while Xanadu aspired to be the universal hypertext that would include all the documents in the world but never even approached that objective (also because it was designed at a time when the necessary technological preconditions were still lacking), the WWW, born with much smaller ambitions [5], in fact became, in a few years, the main environment used by humankind to exchange information and spread documents, comparable to printed paper for impact and dissemination. Among the causes of the success of the WWW, besides the fact that many of the organizations involved in the early stages of the project were at least partially publicly funded (which allowed its results to be made available free of charge), one should remember its compatibility with all types of software and formats, as well as the choice to renounce more sophisticated but less universal functions.

The most important differences [between Xanadu and WWW] are the open systems nature of the WWW and its ability to be backwards compatible with legacy data. The WWW designers compromised and designed their system to work with the Internet through open standards with capabilities matching the kind of data that was available on the net at the time of the launch. These compromises ensured the success of the WWW but also hampered its ability to provide all the features one would ideally want in a hypertext system. (Nielsen 1995, 65)

An important part of this, discussed below, is the integration of a hypertext system with existing data, so as to provide a universal system, and to achieve critical usefulness at an early stage. (Berners-Lee 1989)

The priority always assigned by Berners-Lee to the concepts of openness, universality and inclusiveness is also shown by the fact that, rather than inventing yet another new information management system, he succeeded (somewhat like Johannes Gutenberg more than five centuries before) in bringing together numerous ideas and inventions that were already available separately, integrating them in view of a common purpose: computers, the Internet, markup languages, the client/server architecture, open standards, the concept of hypertext (not in the closed version of Enquire but in the open one of Xanadu) and the many ideas for the concrete development of digital hypertexts seen in the previous sections. After all, Berners-Lee himself admitted it on several occasions:

I happened to come along with time, and the right interest and inclination, after hypertext and the Internet had come of age. The task left to me was to marry them together. (Berners-Lee 1999, p. 6)

Aspects of universality are present in all the constituent elements of the WWW (Salarelli 1997; W3C 2004) and contribute to making it the most open (see section 2.1) of all the hypertext systems ever made. The system as a whole can be summarized like this:

Berners-Lee essentially created a system to give every page on a computer a standard address. This standard address is called the universal resource locator and is better known by its acronym URL. Each page is accessible via the hypertext transfer protocol (HTTP), and the page is formatted with the hypertext markup language (HTML). Each page is visible using a web browser. (Kale 2016, 57)

More in detail, the main components of the WWW are the following.

Servers and clients. Even before the invention of the WWW, the Internet was organized with an architecture called client/server, in which a certain number of more powerful computers (called hosts), constantly on, host programmes called servers. These provide data or other functionalities that can be used remotely through programmes called clients, which run on other computers that are typically less powerful and less expensive but much more numerous, and that are turned on and off depending on the needs of their users. In everyday language, the computers that host server software are themselves called servers and the computers on which client software runs are themselves called clients. HTTP (Hypertext Transfer Protocol) is the protocol (i.e. the set of rules) used by web servers (where the web pages are stored) and by web clients (i.e. by browsers) to communicate with each other.
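The exchange between a web client and a web server can be illustrated with a minimal sketch of the text messages involved. It assumes the HTTP/1.1 message syntax; the function names build_get_request and parse_status_line are hypothetical, chosen only for this illustration, and no network connection is opened.

```python
from urllib.parse import urlsplit


def build_get_request(url):
    """Return the text a web client would send to ask a server for `url`."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    # Request line, Host header, then an empty line that ends the request.
    # The fragment (the part after '#') is never sent to the server.
    return (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {parts.netloc}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )


def parse_status_line(line):
    """Split the first line of a server response into version, code, reason."""
    fields = line.strip().split(" ", 2)
    version, code = fields[0], int(fields[1])
    reason = fields[2] if len(fields) > 2 else ""
    return version, code, reason


if __name__ == "__main__":
    print(build_get_request("http://www.iskoi.org/doc/filosofia6.htm"))
    print(parse_status_line("HTTP/1.1 200 OK"))
```

The client names the page it wants and the host it is addressing; the server answers with a status line followed by headers and the page itself.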

Pages and browsers. WWW nodes consist mainly of files (called pages) with .html or .htm extensions written in or converted to HTML (Hypertext Markup Language) (W3C 2016a), which is a markup language derived from SGML (Standard Generalized Markup Language), an international standard developed between the 1960s and the 1980s (Goldfarb 2008) to allow the separation and distinction, in digital texts, of information content from the way in which such content is displayed to users. This is done by means of marks (i.e. tags) that identify certain sections of the text, such as a sentence or a word, indicating that they belong to a particular logical category (for example, that of the titles of primary importance), but without specifying how this membership will be communicated to users. The task of making all titles of primary importance appear in the same way, distinguishing them both from plain text and from titles of secondary and tertiary importance, is delegated to the browser, which is the software for displaying web pages (and sometimes also for creating and editing them, which can otherwise be done with other software). Each browser interprets the instructions provided by HTML tags in its own way, but without making the tags visible to users. The difference in interpretation may be minimal (as happens with Internet Explorer and Google Chrome, which both show the titles of primary importance in a bigger and bolder font, with only small graphical variations) or huge (as happens, for example, with an audio browser, which allows blind people to surf the WWW by transforming texts into sounds and rendering different levels of headings with different voice tones or other sound signals). The two best-known types of browsers are the graphical ones (currently the most popular, which also display images) and the textual ones (which only display text and were popular especially in the early years of the WWW).
In order to ensure the accessibility to the WWW with all types of browsers, screens and software platforms, as well as to all users with reduced sensory capabilities, it is very important that pages are written in regular HTML — which in 2000 became an ISO (International Organization for Standardization) standard — without inventing tags interpretable only by some browsers, providing textual alternatives for visual and sound content and respecting the guidelines for accessibility developed and maintained by the W3C (W3C 2016b).
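The separation between logical markup and its presentation can be sketched with two toy "browsers" that read the same HTML and render it differently. This is only an illustration under stated assumptions: the sample page and the classes TextualRenderer and AudioRenderer are hypothetical, and real browsers are far more elaborate. The sketch relies on the HTMLParser class of the Python standard library.

```python
from html.parser import HTMLParser

# Hypothetical sample page: the tags say what each piece of text is,
# not how it should look.
PAGE = "<h1>Hypertext</h1><p>Nodes connected by links.</p><h2>History</h2>"


class TextualRenderer(HTMLParser):
    """Shows headings with capitals and underlining; tags stay hidden."""

    def __init__(self):
        super().__init__()
        self.lines = []
        self.current = None  # name of the tag currently open, if any

    def handle_starttag(self, tag, attrs):
        self.current = tag

    def handle_endtag(self, tag):
        self.current = None

    def handle_data(self, data):
        if self.current == "h1":
            self.lines += [data.upper(), "=" * len(data)]
        elif self.current == "h2":
            self.lines += [data, "-" * len(data)]
        else:
            self.lines.append(data)


class AudioRenderer(TextualRenderer):
    """Announces the heading level instead of changing the typography."""

    def handle_data(self, data):
        if self.current in ("h1", "h2"):
            self.lines.append(f"[heading level {self.current[1]}] {data}")
        else:
            self.lines.append(data)


def render(renderer_class, markup):
    """Feed the markup to a fresh renderer and return its output lines."""
    renderer = renderer_class()
    renderer.feed(markup)
    renderer.close()
    return renderer.lines


if __name__ == "__main__":
    print("\n".join(render(TextualRenderer, PAGE)))
    print("\n".join(render(AudioRenderer, PAGE)))
```

Both renderers receive identical markup; only the decision about how to communicate "title of primary importance" differs, which is the point of keeping pages in regular HTML.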

Anchors and links. HTML tags often work in pairs: a “start tag” tells the browser where the section that should be displayed in a certain way begins, while a corresponding “end tag” indicates where that section ends. Some tags, however, are isolated, such as those that order the browser to display a line break or an image. The most relevant pair of tags for the hypertextuality of the WWW is the one that orders the browser to highlight (often by underlining it and changing its colour) a certain word or sentence (or an image, which is more difficult to highlight) and to link it to another web page, or to a specific word, sentence or image contained in the same page or in any other web page reachable on the same computer or through the Internet. When the user clicks with the mouse on (or otherwise activates) such a word, sentence or image (which is the source anchor), the browser stops displaying the start page (or its section) and displays the page (or its section) that is the target anchor of the link just followed. In order to allow the browser to understand where to look for the target anchor, each web server hosts a number of “addresses”, articulated through directories and subdirectories, that lead to the specific URL (Uniform Resource Locator) of each web page and, if necessary, of specific points inside it. This URL (which is a text string like http://www.iskoi.org/doc/filosofia6.htm#1) is placed inside the pair of tags that transforms a word into a source anchor, following this syntax:

<A HREF="http://www.iskoi.org/doc/filosofia6.htm#1">bibliography</A>

corresponding to an order given to the browser that could be translated into human language like this: “Dear browser, when I click with the mouse on the word ‘bibliography’, please display the web page filosofia6.htm which resides on the server http://www.iskoi.org inside the directory doc and scroll down until you find the section identified by this pair of tags: <A NAME="1"></A>”.
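The way a browser reads such an anchor can be sketched in a few lines. The example is a minimal illustration using the Python standard library; the class AnchorCollector and the function describe_target are hypothetical names, and the dictionary keys simply mirror the wording of the "Dear browser" translation above.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urlsplit

ANCHOR = '<A HREF="http://www.iskoi.org/doc/filosofia6.htm#1">bibliography</A>'


class AnchorCollector(HTMLParser):
    """Collects (source anchor text, target URL) pairs from <A HREF=...>."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # target of the anchor currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser converts tag and attribute names to lower case.
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:  # <A NAME=...> marks a target, not a link
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text), self._href))
            self._href = None


def describe_target(url):
    """Split a URL into the parts named in the explanation above."""
    parts = urlsplit(url)
    directory, filename = posixpath.split(parts.path)
    return {
        "server": parts.netloc,
        "directory": directory.strip("/"),
        "file": filename,
        "section": parts.fragment,
    }


if __name__ == "__main__":
    collector = AnchorCollector()
    collector.feed(ANCHOR)
    collector.close()
    for text, url in collector.links:
        print(text, describe_target(url))
```

The text between the two tags is the source anchor shown to the reader, while the HREF value tells the browser which server, directory, file and section make up the target anchor.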

Nodes that are not pages. One of the major innovations of the WWW compared to other hypertext systems is that it does not force users to adopt, for their documents, only one of the innumerable formats available for encoding them or, worse still, to produce or convert them all in yet another new format usable only within that particular system. On the contrary, the WWW is extremely hospitable and is almost a hypertextual meta-system, with the ambition to become a single platform for managing any possible digital document, but also with the humility not to impose its own exclusive format for this purpose. This is possible because not all WWW nodes are pages written in HTML: they can also consist of files encoded in any of the innumerable formats understandable by graphical browsers, such as JPG for images, MP3 for sounds, MP4 for movies and TXT or PDF for texts. Not all URLs, therefore, end with the name of a file with an .html or .htm extension; they can also end with a .pdf or .jpg extension. In addition, browsers can connect via the Internet not only to web servers (recognizable by URLs that start with http://) but also to most of the servers that use protocols other than (and often older than) HTTP to provide online information and services, such as Telnet (which since 1969 has provided access to textual interfaces of remote hosts), FTP (which since 1971 has allowed files to be moved from clients to servers and vice versa) and Gopher, which since 1991 (although with little popularity after the mid-1990s) has offered a more sober alternative to the WWW, less demanding in terms of computing resources, for organizing online information resources by means of hierarchical menus (Gihring 2016). These servers can therefore be reached by following links that start from web pages and point to URLs beginning with telnet://, ftp:// or gopher:// or, as with any other URL, by typing the full address into the address field of the browser.
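How the beginning and the end of a URL hint at the kind of server and the kind of node can be shown with a small sketch. The two lookup tables are assumptions that cover only the protocols and formats named in this section, the function name classify is hypothetical, and the example.org hosts are placeholders rather than real servers.

```python
import posixpath
from urllib.parse import urlsplit

# Only the protocols and formats mentioned in the text (illustrative subset).
PROTOCOLS = {"http": "HTTP", "telnet": "Telnet", "ftp": "FTP", "gopher": "Gopher"}
FORMATS = {
    ".html": "HTML page",
    ".htm": "HTML page",
    ".jpg": "image",
    ".mp3": "sound",
    ".mp4": "movie",
    ".txt": "text",
    ".pdf": "text",
}


def classify(url):
    """Return (protocol, kind of node) guessed from the URL alone."""
    parts = urlsplit(url)
    protocol = PROTOCOLS.get(parts.scheme, "unknown protocol")
    extension = posixpath.splitext(parts.path)[1].lower()
    kind = FORMATS.get(extension, "unspecified")
    return protocol, kind


if __name__ == "__main__":
    for url in (
        "http://www.iskoi.org/doc/filosofia6.htm#1",
        "ftp://ftp.example.org/pub/report.pdf",   # placeholder host
        "gopher://gopher.example.org/",           # placeholder host
    ):
        print(url, classify(url))
```

A file extension is only a hint: a real browser relies on the content type declared by the server, so this guess from the URL alone is a simplification.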

Sites and domains. A website is a set of web pages and other nodes viewable with a browser that are linked to each other in such a way as to form a coherent information system, typically (but not necessarily) all having URLs that share the part that goes from http:// to the first following slash (for example: www.iskoi.org), called the domain. The main page of each site is called the homepage, and it is good practice for every page of the site to contain an anchor linked to it.
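The idea that the pages of a site typically share a domain can be sketched by grouping URLs on that shared part. This is a rough illustration only: the function names are hypothetical, the second and third URLs are invented placeholders, and sharing a domain is, as noted above, typical but not necessary for belonging to the same site.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def domain_of(url):
    """Return the part between '://' and the first following slash."""
    return urlsplit(url).hostname or ""


def group_by_domain(urls):
    """Map each domain to the list of URLs that share it."""
    groups = defaultdict(list)
    for url in urls:
        groups[domain_of(url)].append(url)
    return dict(groups)


if __name__ == "__main__":
    urls = [
        "http://www.iskoi.org/doc/filosofia6.htm#1",
        "http://www.iskoi.org/index.htm",        # invented path
        "http://www.example.org/about.html",     # placeholder site
    ]
    for domain, members in group_by_domain(urls).items():
        print(domain, len(members))
```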

3.9 The third generation of hypertext systems