ISKO

Encyclopedia of Knowledge Organization

Citation indexing and indexes

by Paula Carina de Araújo, Renata Cristina Gutierres Castanha and Birger Hjørland

Table of contents:

1. The idea of a citation database

2. The principles and design of citation indexes

3. Classifications of existing citation indexes

4. The Science Citation Index and other ISI/Clarivate Analytics citation indexes

4.1 Science Citation Index (SCI) / Science Citation Index Expanded (SCIE)

4.2 Social Sciences Citation Index (SSCI)

4.3 Arts & Humanities Citation Index (A&HCI)

4.4 Conference Proceedings Citation Index (CPCI)

4.5 Book Citation Index (BKCI)

4.6 Data Citation Index

4.7 Emerging Sources Citation Index (ESCI)

5. Citation databases from other database producers

5.1 CiteSeerX

5.2 Crossref

5.3 Scopus Citation Index (Scopus)

5.4 Google Scholar (GS)

5.5 Microsoft Academic (MA)

5.6 Dimensions

5.7 Other citation databases

6. Comparative studies of 6 of the major citation indexes

6.1 Coverage

6.2 Quality of indexed documents

6.3 Control over and reliability of the search

6.4 Search options and metadata

6.5 Conclusion of section

7. Predecessors to the Science Citation Index

7.1 Shepard’s Citations (Shepard’s)

8. Citations as subject access points (SAP)

9. Studies of citation behavior (citer motivations)

9.1 Citation theories

10. General conclusion

Acknowledgments

Endnotes

References

Appendix 1: Regional citation databases

App. 1.1: Chinese Science Citation Database (CSCD)

App. 1.2: Chinese Social Sciences Citation Index (CSSCI)

App. 1.3: Korean Journal Database (KCI)

App. 1.4: Scientific Electronic Library Online Citation Index (SciELO)

App. 1.5: Russian Science Citation Index (RSCI)

App. 1.6: Taiwan Citation Index — Humanities and Social Sciences (TCI-HSS)

App. 1.7: Citation Database for Japanese Papers (CJP)

App. 1.8: Islamic Citation Index

App. 1.9: MyCite (Malaysian Citation Index)

App. 1.10: ARCI (Arabic Citation Index)

App. 1.11: Indian Citation Index (ICI)

Colophon

Abstract:

A citation index is a bibliographic database that provides citation links between documents. The first modern citation index was suggested by information scientist Eugene Garfield in 1955 and created by him in 1964, and it represents an important innovation to knowledge organization and information retrieval. This article describes citation indexes in general, considering the modern citation indexes, including Web of Science, Scopus, Google Scholar, Microsoft Academic, Crossref, Dimensions and some special citation indexes and predecessors to the modern citation index like Shepard’s Citations. We present comparative studies of the major ones and survey theoretical problems related to the role of citation indexes as subject access points, recognizing the implications to knowledge organization and information retrieval. Finally, studies on citation behavior are presented and the influence of citation indexes on knowledge organization, information retrieval and the scientific information ecosystem is recognized.

1. The idea of a citation database

Scientific and scholarly authors normally cite other publications. They do so by providing bibliographical references to other → documents in the text and elaborating them in a special “list of references” (as in this encyclopedia article) or in footnotes. (In the bibliometric literature such references are also often termed “cited references”.) When reference is made to another document, that document receives a citation. As expressed by Narin (1976, 334; 337): "a citation is the acknowledgement one bibliographic unit receives from another whereas a reference is the acknowledgement one unit gives to another" [1]. While references are made within documents, citations are received by other documents [2]. References contain a set of standardized information about the cited document which allows its identification (as, for example, the references in the present article) [3].

A citation → index is a paper-based or electronic database that provides citation links between documents. It may also be termed a reference index, but this term is seldom used [4], and in the following we use the established term: citation index [5].

It has always been possible to trace the references a given document makes to earlier documents (so-called backward searching). A citation index, however, makes it possible to trace the citations (if any) that a given document receives from later documents (so-called forward searching) [6], depending on which documents have been indexed. Examples:

- Nyborg (2005) is an article about sex differences in general intelligence (g) that concluded: “Proper methodology identifies a male advantage in g that increases exponentially at higher levels, relates to brain size, and explains, at least in part, the universal male dominance in society”. If you would like to see whether this conclusion has been challenged or rejected by other researchers, you need only look up Nyborg’s paper in a citation index [7].

- If you would like to see whether somebody has used your published ideas in their research, you may look yourself up in a citation index.

- If you would like to see whether a certain person or work is cited within a given field, you may look that person up and limit your search to that field. This way you may, for example, see which papers in library and information science have cited any work by Michel Foucault or the specific reference Garfield (1980).
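The difference between backward and forward searching can be illustrated with a minimal sketch. It assumes a toy collection in which each indexed document is mapped to the documents it references; all identifiers and function names are invented for illustration and do not correspond to any real database.

```python
# Toy data: each indexed (citing) document maps to the documents it references.
# All identifiers are invented for illustration.
references = {
    "paper_2006": ["paper_2005", "paper_1998"],
    "paper_2007": ["paper_2005"],
    "paper_2005": ["paper_1998"],
}


def backward_search(doc_id):
    """Return the references a document makes to earlier documents."""
    return list(references.get(doc_id, []))


def forward_search(doc_id):
    """Return the indexed documents that cite doc_id."""
    return sorted(citing for citing, cited in references.items() if doc_id in cited)


print(backward_search("paper_2005"))  # ['paper_1998']
print(forward_search("paper_2005"))   # ['paper_2006', 'paper_2007']
print(forward_search("paper_2007"))   # []
```

Note that the forward search can only return citing documents that are themselves part of the indexed collection, which is why the result depends on which documents have been indexed.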

No doubt, citation indexes are very important tools that have revolutionized the way we can search for information. This article focusses on the function of citation indexes in helping researchers identify useful and relevant research. Citation indexes are, however, increasingly used to evaluate research and researchers, and this function may influence how they are developing, and thus also their functionality for document searching.

2. The principles and design of citation indexes

In the words of Weinstock (1971, 16): “a citation index is a structured list of all the citations in a given collection of documents. Such lists are usually arranged so that the cited document is followed by the citing documents”. It is the scientist (or scholar) who creates the citations, not the citation index, as has been claimed [8]; the role of citation indexes is to make the citations findable.

McVeigh (2017, 941) explains that “a true citation index has two aspects [or parts] — a defined source index and a standardized/unified cited reference index”. In Figure 1, on the left, two articles are shown. These articles are represented in the part of the citation index called the source index. For each article a long range of metadata is provided, including author names, title of article, title of journal, and the list of bibliographical references contained in the article. The source index is therefore a comprehensively described set of the indexed materials from which cited references will be compiled.

On the right in Figure 1 a list of references A-J, derived from the source index, is shown. These references represent the cited reference index, where each reference points back to the article in which it occurs (and back to the source index). A citation index is thus, in the words of McVeigh (2017, 941), “derived from a two-part indexing of source material. Bibliographic entries are created for each source item; cited references are captured into a separate index, where identical references are unified. The resulting two-part structure is the basic architecture of a citation index”.

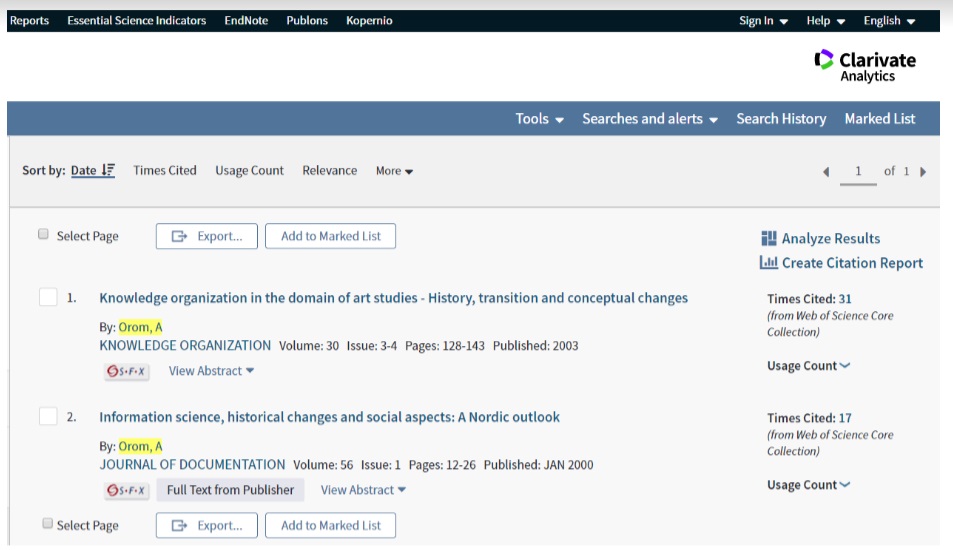
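The two-part structure described by McVeigh can be sketched as follows. This is only an illustration under simplifying assumptions: the records, field names and the function `build_cited_reference_index` are hypothetical, and references are treated as unified only when their strings are identical.

```python
from collections import defaultdict

# Source index: one bibliographic record per indexed item (hypothetical data).
source_index = {
    "S1": {
        "author": "Author A",
        "title": "First article",
        "journal": "Journal X",
        "references": ["Ref A", "Ref B", "Ref C"],
    },
    "S2": {
        "author": "Author B",
        "title": "Second article",
        "journal": "Journal Y",
        "references": ["Ref B", "Ref D"],
    },
}


def build_cited_reference_index(sources):
    """Capture cited references into a separate index.

    Identical reference strings are unified into one entry that points
    back to every source item in which the reference occurs.
    """
    cited = defaultdict(list)
    for source_id, record in sources.items():
        for ref in record["references"]:
            cited[ref].append(source_id)
    return dict(cited)


cited_reference_index = build_cited_reference_index(source_index)
print(cited_reference_index["Ref B"])  # ['S1', 'S2']
```

In this sketch the cited reference index is derived entirely from the source index, which mirrors the point that each cited reference points back to the source item in which it occurs.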

Figure 2 shows an example: Among Anders Ørom’s many publications two have been indexed by Web of Science, an article in Knowledge Organization, and another in Journal of Documentation. The figure also shows how many times each article has been cited (on July 8, 2019); the citing articles are displayed by clicking on the number. However, as shown below, not all citing articles have been captured (for the article in Knowledge Organization, only the 31 citations that have been “unified” are included).

Ørom A* [9] as author in WoS, source index

If you would like to see which references are cited by Ørom (2003) (i.e., perform a backward search), you can get a copy of the article itself and see its list of references; it is also possible to select the full record in the source index (not shown) and click on "cited references" there [10].

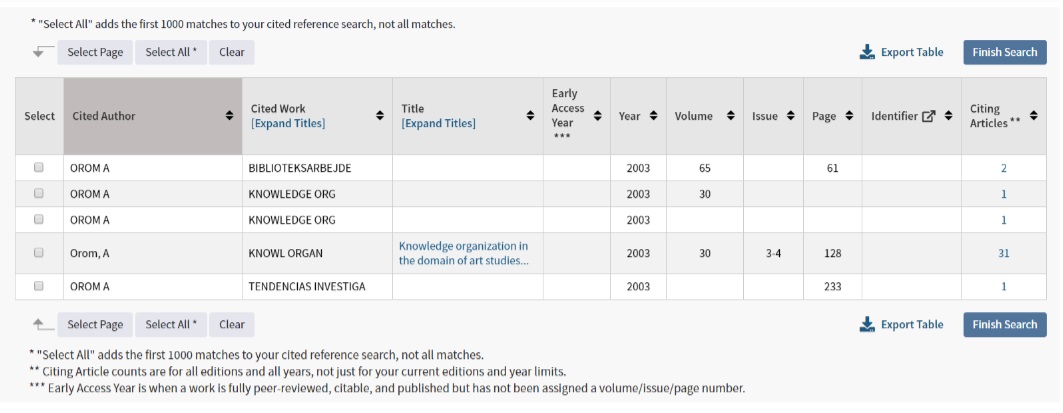

Figure 3 shows a corresponding example of forward searching from WoS: 5 references that matched the query “references citing Ørom (2003)”.

2003 in WoS, cited reference index

However, 3 of the 5 references in Figure 3 are to the same article in Knowledge Organization; in other words, there are 3 cited reference variants. This specific article has been cited 1+1+31 = 33 times (on July 8, 2019). One of the 3 reference variants provides the title of the paper, the issue and the starting page; this reference also states that the article has 31 citing articles (the same 31 as in Figure 2). This means that 31 of the 33 citing references have been “unified”, but the unification algorithm has not been able to unify two of the variants. By selecting all three cited reference variants to the article in Knowledge Organization, a list of all 33 citing references can be displayed. (This example is very simple with few cited reference variants, but searches often return very many variants.) We see that unification makes the citation index simpler to use [11]. McVeigh (2017, 941) writes that the data collected from those references are standardized to allow like citations to be collected, or unified. We saw in Figure 3 what is meant by unification of citations (and that some citations were not unified).

It is important to understand how citations fail to be unified, and that unification and control in bibliographic databases are relative concepts. In contrast to typical library catalogs, for example, citation indexes do not provide standardized author names [12]. The Science Citation Index, for example, is based on a rather mechanical indexing of both metadata in the source index and references in the cited reference index, using the data as given by the source documents themselves. This means that if an author sometimes uses two initials and sometimes only one, his or her writings are not unified (and both author searching and cited reference searching may be difficult). This is especially a problem when authors have common names like A. Smith, or when names are spelled in many ways in source documents, as is the case for many Russian authors such as Lev Vygotsky. (Compare the concept stray citations in Section 6.3.)
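How cited reference variants might be grouped can be sketched with a crude normalization key. This is an assumption-laden illustration and not the unification algorithm used by any real citation index: the variant strings are invented, the helper `variant_key` is hypothetical, and only the counts (31, 1 and 1) follow the example above.

```python
import re
from collections import defaultdict

# Hypothetical cited-reference variants for one article:
# (author as cited, year, cited work as given, number of citing articles).
variants = [
    ("Orom A", 2003, "KNOWL ORGAN", 31),
    ("Orom A.", 2003, "Knowledge Organization", 1),
    ("OROM A", 2003, "Knowl. Org.", 1),
]


def variant_key(author, year, work):
    """Build a crude matching key: surname, first initial, year, journal initials."""
    surname, _, initials = author.replace(".", "").partition(" ")
    work_initials = "".join(word[0] for word in re.findall(r"[A-Za-z]+", work))
    return (surname.lower(), initials[:1].lower(), year, work_initials.lower())


totals = defaultdict(int)
for author, year, work, count in variants:
    totals[variant_key(author, year, work)] += count

print(dict(totals))  # {('orom', 'a', 2003, 'ko'): 33}
```

Such a key is deliberately loose: by keeping only the first initial it would also merge different authors who share a surname and first initial, which illustrates why unification and control are relative concepts.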

McVeigh (2017, 943) emphasizes that a citation index is more than just a bibliographic resource with linked cited references. It is the structured, standardized data in the cited reference index, independently of the source index, that for her defines a citation index.

3. Classifications of existing citation databases

In this article citation indexes are presented in the following order:

- Section 4 presents the Science Citation Index and later ISI/Clarivate Analytics citation indexes in chronological order;

- Section 5 presents citation databases from other database producers (except regional databases) in chronological order;

- Regional citation databases (and databases in other languages than English) are presented in Appendix 1;

- Section 6 presents comparative studies of 6 major competing citation indexes: Crossref, Dimensions, Google Scholar (GS), Microsoft Academic (MA), Scopus and Web of Science (WoS);

- Predecessors to the Science Citation Index are presented in Section 7 (placed here because the interest in historical studies of former citation indexes was a response to the great interest that arose in the ground swell of Garfield’s citation indexes).

Citation indexes may also be classified as follows (see the endnotes for the specific titles in each category):

- By subject coverage: (a) universal citation databases [13]; (b) databases covering science [14]; (c) databases covering social sciences [15]; (d) citation databases covering the humanities [16]; (e) other subject specific citation databases [17].

- Citation databases covering specific document types: (a) books [18], (b) conference proceedings [19]; (c) data sets [20].

The most important databases are placed in separately numbered sections. For each database some standardized information is given (such as date of launch) together with a presentation of relevant literature about that database. At the end of each description are links to the homepage of the database and to lists of journals or other sources covered (as bulleted lists).

4. The Science Citation Index and other ISI/Clarivate Analytics citation indexes

The American government stimulated the development of scientific research soon after World War II. Given the fast-growing volume of scientific literature and concern about the capacity of the existing systems for information exchange among scientists, the government sponsored many projects aimed at improving methods for distributing and managing scientific information. Eugene Garfield was a member of the study team at Johns Hopkins Welch Medical Library sponsored by the Armed Forces Medical Library. Because of that experience “I [Garfield] became interested in whether and how machines could be used to generate indexing terms that effectively described the contents of a document, without the need for the intellectual judgments of human indexers” [21] (Garfield 1979, 6).

Garfield’s experience working in that project, his experience doing voluntary abstracting work for Chemical Abstracts and the fact that he learned that there was an index to the case literature of the law that used citations (Shepard's Citations, see Section 7.1), led him to create the first modern citation index. He presented his idea of the citation index in Garfield (1955). Garfield's company, Institute for Scientific Information (ISI), was founded in 1960 in Philadelphia and in 1964 Garfield published the first Science Citation Index (SCI; see further on SCI in Section 4.1). ISI has shifted ownership and name many times and is today known as Clarivate Analytics [22]. This company has over the years created a suite of citation indexes to be presented below. Because of the many name shifts, these may be referred to by different names, such as ISI’s, Thomson Scientific’s or Clarivate Analytics’ citation indexes.

Web of Science (WoS) [23] is a platform created in 1997 consisting of databases designed to support scientific and scholarly research. It contains several databases, which can be searched together (but not all of them are citation indexes). They can be grouped as follows (see endnotes for lists of all databases in each group):

- (a) WoS Core Collection (e.g., SCI) [24].

- (b) Databases produced by ISI/Clarivate Analytics, but not included in the Core Collection (e.g., Data Citation Index) [25].

- (c) Other databases hosted by WoS and produced by other data providers (e.g., Russian Science Citation Index) [26].

The WoS platform can be considered a modernized version of the SCI. Its citation databases are further described below in the order of their launching.

4.1 Science Citation Index (SCI) / Science Citation Index Expanded (SCIE)

SCI was officially launched in 1964. SCIE is, as the name indicates, a larger version of the SCI [27]. Since its launch, the SCI, like most other citation indexes, has expanded retroactively, so that the year of launch does not tell which years are searchable (at the time of writing, SCI contains references back to 1898). The SCI was founded on some ideas and practical considerations that contrasted it with the major subject bibliographies at the time:

- It covered all scientific disciplines, not just one (or a set of related fields), and it therefore had to be more selective in its choice of journals (and other documents) to be covered. This was done by applying Bradford’s law of scattering, which Garfield (1971) modified to Garfield's law of concentration. Sugimoto and Larivière (2018, 25-26) emphasize that

Garfield never sought for the SCI to be an exhaustive database; rather, he aimed to curate a selective list of journals that were the most important for their respective disciplines. He used Bradford’s law of scattering as the core principle. This law dictates that the majority of cited literature in a domain is concentrated in a few journals and, conversely, that the majority of scholarly journals contain a minority of cited documents.

By implication a citation index needs only to index relatively few journals in order to display a majority of the references. Initially SCI covered 613 journals; today it indexes 9,046 journals showing data from 1898 to present with complete cited references (cf., Web of Science 2018). The journals are mainly selected by use of the journal impact factor (JIF), a bibliometric index that reflects the yearly average number of citations to recent articles published in a given journal. It is frequently used as a proxy for the relative importance of a journal within its field. It was devised by Eugene Garfield and is produced yearly by ISI/Clarivate Analytics; an alternative based on Scopus data is the SCImago Journal Rank (SJR).

- In contrast to the ordinary bibliographical databases, SCI did not use human indexers for individual articles (just for an overall classification of journals). It was based on information contained in the articles themselves (derived indexing), without any human assigned information, and it relied to a high degree on mechanical rather than intellectual indexing. It was assumed that searching the titles (translated to English) and the references/citations would be sufficient to make it an important tool. (Also, in contrast to the dominant documentation databases, SCI did not include abstracts of the indexed articles at its start; however, abstracts taken from the articles were added from 1971, and thereby the difference between “abstract journals” and citation indexes was reduced.)

- Journals indexed in SCI were indexed “cover-to-cover”, not selectively. This removed another source of subjective choice in the indexing process, as users could rely on journals being fully indexed.
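The journal impact factor mentioned above can be illustrated with a short calculation. The sketch assumes the conventional two-year window (citations received in year Y by items published in years Y-1 and Y-2, divided by the number of citable items published in those two years); the function name and the numbers are invented for illustration.

```python
def journal_impact_factor(citations_to_recent_items, citable_items_published):
    """Two-year JIF for year Y (assumed conventional definition).

    citations_to_recent_items: citations received in year Y by items
        published in years Y-1 and Y-2.
    citable_items_published: number of citable items published in
        years Y-1 and Y-2.
    """
    if citable_items_published <= 0:
        raise ValueError("the journal must have published at least one citable item")
    return citations_to_recent_items / citable_items_published


# Invented numbers: 150 + 90 citations in year Y to items from Y-1 and Y-2,
# which together comprised 60 + 60 citable items.
print(journal_impact_factor(150 + 90, 60 + 60))  # 2.0
```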

Braun, Glänzel and Schubert (2000) examined how different disciplines, countries and publishers are represented in the SCI by comparing the subset of journals indexed here with the number of journals covered by Ulrich's International Periodicals Directory from 1998. They found (p. 254) that, on average, SCI covered 9.83% of the journals in Ulrich's, but some fields were overrepresented (physics 27.4%, chemistry 26.3%, mathematics 25.0%, biology 23.9%, pharmacy and pharmacology 15.0%, medical sciences 14.8%, engineering 14.5% and earth sciences 12.8%). Seventeen other fields were found to be underrepresented compared to the 9.83% (in decreasing order): environmental studies 9.4%, computers 8.9%, metallurgy 8.6%, energy 6.4%, public health and safety 5.9%, sciences: comprehensive works 5.0%, petroleum and gas 5.0%, agriculture 5.0%, food and food industries 4.7%, forests and forestry 4.4%, psychology 4.0%, aeronautics and space flight 3.6%, technology: comprehensive work 3.1%, geography 2.4%, gardening and horticulture 1.4%, transportation 0.6% and finally building and construction 0.4%.

See also:

- Web of Science Fact Book (Clarivate Analytics undated).

- Clarivate Analytics [2019]. Science Citation Index Expanded: Journal List: http://mjl.clarivate.com/cgi-bin/jrnlst/jlresults.cgi?PC=D.

4.2 Social Sciences Citation Index (SSCI)

SSCI was established in 1973 and, according to Web of Science (2018), indexes 3,300 journals showing data from 1900 to present with complete cited references. It is thus possible to search more than a century of information in one place, across 55 disciplines of the social sciences.

While most physical science research papers are universal in their interest and published in international journals in English, much research from the social sciences tends to be of primary interest to readers from the authors’ country, and often it is published in a national language and in journals not processed for the SSCI (cf., Lewison and Roe 2013); these authors examined SSCI’s coverage of journals from different countries. They concluded that their results can only be regarded as rather approximate but that it is apparent that the shortfall in coverage is real and quite large, and biggest for Russia, Poland and Japan; somewhat smaller for Italy, Spain and Belgium; less again for the Scandinavian countries; and least for the Anglophone countries (Australia, Canada, the UK), as would be expected. Klein and Chiang (2004) found that there is evidence of bias of an ideological nature in SSCI coverage of journals. See also:

- SSCI website: http://mjl.clarivate.com/scope/scope_ssci/

- SSCI journal list: http://mjl.clarivate.com/publist_ssci.pdf

4.3 Arts & Humanities Citation Index (A&HCI)

The A&HCI was established in 1978. It demonstrated some ways in which the humanities differ from science and social sciences, for example in the use of many implicit citations which need to be formalized by the indexing staff (Garfield 1980). Today it indexes 1,815 journals showing data from 1975 to present with full cited references including implicit citations (citations to works found in the body text of articles and not included in the bibliography, e.g., works of art). Garfield (1977b) suggested, just before A&HCI was started, how this index might benefit the humanities.

It has long been felt that adequate coverage is more problematic in A&HCI compared to SCI and SSCI, and this was a major reason for the European Science Foundation to initiate the development of the European Reference Index for the Humanities (ERIH) [28]. Sivertsen and Larsen (2012) considered the lower degree of concentration in the literature of the social sciences and humanities (SSH) and concluded that the concentration is strong enough to make citation indexes feasible in these fields. See also:

- Wikipedia. "A&HCI" at: https://en.wikipedia.org/wiki/Arts_and_Humanities_Citation_Index

- A&HCI journal list: http://mjl.clarivate.com/cgi-bin/jrnlst/jlresults.cgi?PC=H

4.4 Conference Proceedings Citation Index (CPCI)

The CPCI was established in 2008. It was preceded by some conference proceeding indexes from ISI, which were not citation indexes (Garfield 1970; 1977a; 1978; 1981). CPCI now indexes 197,792 proceedings within two main sub-indexes: Conference Proceedings Citation Index: Science (CPCI-S) and Conference Proceedings Citation Index: Social Science & Humanities (CPCI-SSH) (Web of Science 2018). The proceedings selection process is described by Testa (2012). (There is at present no specific homepage for this database at Clarivate Analytics.)

4.5 Book Citation Index (BKCI)

BKCI was established in 2011. According to Web of Science (2018) it currently indexes 94,066 books from 2005 to present. Clarivate Analytics (2018) [29] wrote:

For the coverage of Book Citation Index, each book is evaluated on a case by case basis. The focus is on scholarly, research-oriented books for product. Once a book is selected, both the chapters and the book itself will be indexed. The index page is the guide for the book, so if available, the contents of the index page and all the references will be included. If the book is selected, the full book is indexed. There is no selective coverage. This means that there is no selective indexing of only a few chapters of a selected book into Book Citation Index — without indexing the whole book in BKCI.

Coverage of revised edition of a book in Book Citation Index

Revised edition is picked up only if there is new material to present. The revised version will be selected only if it presents new content. If the 2nd edition does not present 50% or more new content, it will not be selected.

The focus is for new content, never published in the products. In fact, the revised version may be less than 1 percent of coverage.

Leydesdorff and Felt (2012a; 2012b) are two versions of the same study of BKCI. It found that books contain many citing references but are relatively less cited, which may find its origin in the slower circulation of books than of journal articles and that the reading of books is time consuming. The introduction of BKCI “has provided a seamless interface to WoS”.

Torres-Salinas, Robinson-Garcia and López-Cózar (2012) analyzed different impact indicators relating to the scientific publishers included in the Book Citation Index for the Social Sciences and Humanities fields during 2006-2011. They constructed 'Book Publishers Citation Reports' and presented a total of 19 rankings according to the different disciplines in humanities, arts, social sciences and law with six indicators for scientific publishers.

Gorraiz, Purnell and Glänzel (2013) wrote that BKCI was launched primarily to help researchers identify relevant research that was previously invisible to them because of the lack of significant book content in the WoS. The authors found that BKCI is a first step towards creating a reliable citation index for monographs, but that this is very challenging because of the special requirements of this document type. One of the problems mentioned is that books, in contrast to journal articles, seldom provide address information on authors. Therefore, the authors found that BKCI, in its version at the time of their writing, should not be used for bibliometric or evaluative purposes.

Torres-Salinas et al. (2013) used the BKCI to conduct analyses that could not have been done without this new index. The authors constructed “heliocentric clockwise maps” for four areas (disciplines): arts & humanities, science, social sciences and engineering & technology. For each area, citation average values for the dominant publishers are calculated and displayed. It was found, for example, that the area of engineering & technology is greatly unbalanced because one publisher, Springer, dominates the area accumulating approximately 62% of the total share, that is, 28,000 book chapters of the total of 40,000 belong to this publisher. Other fields may also be unbalanced but not to such an extent. Torres-Salinas, Robinson-Garcia, Campanario and López-Cózar (2014) provided descriptive information about BKCI and found:

Humanities and social sciences comprise 30 per cent of the total share of this database. Most of the disciplines are covered by very few publishers mainly from the UK and USA (75.05 per cent of the books), in fact 33 publishers hold 90 per cent of the whole share. Regarding publisher impact, 80.5 per cent of the books and chapters remained uncited. Two serious errors were found in this database: the Book Citation Index does not retrieve all citations for books and chapters; and book citations do not include citations to their chapters.

Zuccala et al. (2018) studied the metadata assigned to monographs in BKCI and found that many ISBNs are missing for editions of the same work, in particular "emblematic" (original/first) editions. The authors wrote:

The purpose of including all ISBNs is to ensure that every physical manifestation of a monograph is recognized (e.g., print, paperback, hardcopy, e-print) and that each ISBN is indexed as part of the correct edition or expression. This, in turn, ensures that all monograph editions can clearly be identified as being part of the same intellectual contribution, or work. Thus, publication counts and citation counts would be more accurate in the BKCI, and new metric indicators could be calculated more effectively.

See also:

- BKCI website: http://wokinfo.com/products_tools/multidisciplinary/bookcitationindex/

- BKCI master book list: http://wokinfo.com/mbl/

4.6 Data Citation Index

The Data Citation Index was established in 2012 by Thomson Reuters as a point of access to quality research data from repositories across disciplines. As data citation practices increase over the years, the new citation index based on research data is available through the WoS from Clarivate Analytics. The Data Citation Index is a tool designed to be a source of data discovery for sciences, social sciences and arts and humanities. Data Citation Index evaluates and selects repositories considering the content, persistence, stability and searchability. Then, data is organized into three document types: repository, data study and data set. In this index, descriptive records are created for data objects and linked to literature articles in the Web of Science.

The Data Citation Index emerges at a time in which data sharing is becoming a hot issue. Many researchers find, however, that data sharing is time consuming and too little acknowledged by colleagues and funding bodies, and they are therefore not sure whether the practice is worth the effort. Force and Robinson (2014) explain that Data Citation Index aims to solve four key researcher problems: (1) data access and discovery; (2) data citation; (3) lack of willingness to deposit and cite data; and (4) lack of recognition and credit.

Torres-Salinas, Martín-Martín and Fuentes-Gutiérrez (2014, 6) analyzed the coverage of the Data Citation Index considering disciplines, document types and repositories. Their study finds that the Data Citation Index is heavily oriented towards the hard sciences. Furthermore, four repositories represent 75% of the database, even though there are a total of 29 repositories that contain at least 4000 records. The authors believe that the bias towards hard sciences and the concentration on a few repositories are related to data sharing practices that are relatively common in, for example, medicine, genetics, biochemistry and molecular biology.

A study presented in 2014 demonstrated that data citation practices are uncommon within the scientific community, since 88% of the data analyzed had received no citation. The authors state that “data sharing practices are not common to all areas of scientific knowledge and only certain fields have developed an infrastructure that allows to use and share data” (Torres-Salinas, Martín-Martín and Fuentes-Gutiérrez 2014, 6). The pattern of citation also changes from one domain to another. “While in Science and Engineering & Technology citations are concentrated among datasets, in the Social Sciences and Arts & Humanities, citations are normally referred to data studies” (Torres-Salinas, Martín-Martín and Fuentes-Gutiérrez 2014, 6).

Data Citation Index is an initiative that “continues to build content and develop infrastructure in the interest of improving attribution for non-traditional research output and enabling data discoverability and access” (Force and Robinson 2014, 1048). It is a new tool that can help persuade researchers of the importance of sharing their data in order to be cited. Furthermore, “encouraging data citation and facilitating connections between datasets and published literature, the resource elevates datasets to the status of citable and standardized research objects” (1048).

See also:

- Data Citation Index website: https://clarivate.com/products/web-of-science/web-science-form/data-citation-index/

- Data Citation Index master data repository list: https://clarivate.com/master-data-repository-list

4.7 Emerging Sources Citation Index (ESCI)

ESCI was established in 2015. It is a database that according to Clarivate Analytics (2017) indexes 7,280 emerging journals (journals that are not yet considered to fulfill the requirements of SCI, SSCI and AHCI) from 2005 to present with complete cited references. “Journals in ESCI have passed an initial editorial evaluation and can continue to be considered for inclusion in products such as SCIE, SSCI, and AHCI, which have rigorous evaluation processes and selection criteria”. ESCI is also (in 2019) described as covering “new areas of research in evolving disciplines, as well as relevant interdisciplinary scholarly content across rapidly changing research fields”.

ESCI journals do not receive an impact factor, but they are evaluated regularly, and those that qualify are transferred to SCIE, SSCI or A&HCI and hence receive an impact factor.

Testa (2009) wrote:

As the global distribution of Web of Science expands into virtually every region on earth, the importance of regional scholarship to our emerging regional user community also grows. Our approach to regional scholarship effectively extends the scope of the Thomson Reuters Journal Selection Process beyond the collection of the great international journal literature: it now moves into the realm of the regional journal literature. Its renewed purpose is to identify, evaluate, and select those scholarly journals that target a regional rather than an international audience. Bringing the best of these regional titles into the Web of Science will illuminate regional studies that would otherwise not have been visible to the broader international community of researchers.

ESCI thus seems to break with the original idea of SCI of including journals based on their impact factors. Perhaps it can be understood as a response to demands for broader coverage in relation to research evaluation, as well as to the increasing competition from other producers of citation indexes?

See also:

- ESCI website: http://info.clarivate.com/ESCI

- ESCI journal list: http://mjl.clarivate.com/cgi-bin/jrnlst/jlresults.cgi?PC=EX

5. Citation databases from other database producers

5.1 CiteSeerX

CiteSeerX (called CiteSeer until 2006) is an autonomous and automatic citation indexing system introduced in 1997. It focusses primarily on the literature in computer and information science. CiteSeerX was an innovation compared with previous citation indexing systems because its indexing process is completely automatic: the citation index autonomously locates, parses, and indexes articles found on the World Wide Web. It was based on three features: actively acquiring new documents, automatic citation indexing, and automatic linking of citations and documents. CiteSeerX has been hosted by Pennsylvania State University since 2006, when it changed to its present name. See also:

- CiteSeerX website: https://citeseerx.ist.psu.edu/index
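The features named above (acquiring documents, indexing their citations automatically, and linking citations to documents) can be illustrated with a toy sketch. It is not CiteSeerX's actual pipeline: the document identifiers, the sample titles, the title-normalization rule and the function names are all hypothetical, and real systems must first locate documents and parse free-text reference strings, which is skipped here.

```python
import re
from collections import defaultdict


def normalize(title: str) -> str:
    """Reduce a title to lowercase alphanumeric words so trivial variants match."""
    return " ".join(re.findall(r"[a-z0-9]+", title.lower()))


def build_citation_index(documents: dict) -> dict:
    """Map each known document id to the ids of the documents that cite it."""
    # Look-up table from normalized title to document id.
    by_title = {normalize(doc["title"]): doc_id for doc_id, doc in documents.items()}
    cited_by = defaultdict(list)
    for citing_id, doc in documents.items():
        for ref_title in doc["references"]:
            cited_id = by_title.get(normalize(ref_title))
            # Keep only references that resolve to a document in the collection.
            if cited_id is not None and cited_id != citing_id:
                cited_by[cited_id].append(citing_id)
    return dict(cited_by)


# Hypothetical, already-acquired documents with already-extracted reference titles.
documents = {
    "d1": {"title": "A First Example Paper", "references": []},
    "d2": {"title": "A Second Example Paper",
           "references": ["A first example paper."]},
    "d3": {"title": "A Third Example Paper",
           "references": ["A FIRST EXAMPLE PAPER", "A second example paper"]},
}

index = build_citation_index(documents)
print(index)
```

The resulting mapping answers the question a citation index is built for: given a document, which later documents cite it. References that do not match any known title are simply dropped in this sketch.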

5.2 Crossref

Crossref (or CrossRef) was launched in early 2000 by the Publishers International Linking Association Inc. (PILA) as a cooperative effort among publishers to enable persistent cross-publisher citation linking in online academic journals by using the Digital Object Identifier (DOI) [30]. PILA is a non-profit organization that provides citation links for both open access journals and subscription journals for online publications by the contributing publishers (but access to the subscription journals via citation links depends, of course, on the user’s or their library’s subscription to the journals to which the links go).

Crossref citation data is made available on behalf of the Initiative for Open Citations (I4OC) [31], a project launched in 2017 to promote the unrestricted availability of scholarly citation data. (However, Elsevier, a major academic publisher, did not join the initiative.) Harzing (2019, 342) wrote: “the addition of open citation data in April 2017, making it possible to use Crossref for citation analysis through an API [application programming interface]. Since November 2017, Publish or Perish (Harzing 2007) has provided the option of searching for authors, journals and key words in Crossref.” See also:

- Crossref home page: https://www.crossref.org/

- Initiative for Open Citations (I4OC), https://i4oc.org/
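As an illustration of the API-based citation analysis that Harzing mentions, the following minimal sketch builds a request URL for the public Crossref REST API and reads citation information from a response. It assumes the `works/{DOI}` endpoint and the `is-referenced-by-count` and `reference` fields of the response; the DOIs and the sample payload are invented for illustration, and no network request is made.

```python
from urllib.parse import quote

CROSSREF_WORKS = "https://api.crossref.org/works/"


def works_url(doi: str) -> str:
    """Build the URL of the Crossref metadata record for one DOI."""
    return CROSSREF_WORKS + quote(doi, safe="/")


def summarize(payload: dict) -> dict:
    """Extract citation-related fields from a decoded Crossref JSON response."""
    message = payload["message"]
    references = message.get("reference", [])
    return {
        "doi": message.get("DOI"),
        # Number of Crossref-registered works citing this one.
        "times_cited": message.get("is-referenced-by-count", 0),
        # Outgoing references that Crossref could match to a DOI.
        "cited_dois": [ref["DOI"] for ref in references if "DOI" in ref],
    }


# Invented payload imitating the shape of a response (not real data).
sample_payload = {
    "status": "ok",
    "message": {
        "DOI": "10.1234/example.2019",
        "is-referenced-by-count": 7,
        "reference": [
            {"key": "ref1", "DOI": "10.1234/older.2001"},
            {"key": "ref2", "unstructured": "A reference without a DOI"},
        ],
    },
}

summary = summarize(sample_payload)
print(works_url("10.1234/example.2019"))
print(summary)
```

In practice the payload would be obtained by requesting the URL and decoding the JSON body; references lacking a DOI remain unlinked, which is one reason citation counts differ between services.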

5.3 Scopus Citation Index (Scopus)

Elsevier released Scopus in 2004. Jacso (2005, 1539) wrote about it:

Elsevier created Scopus by extracting records from its traditional indexing/abstracting databases, such as GEOBASE, BIOBASE, EMBASE, and enhanced them by cited references. This is a different approach from the one used for the citation index databases of ISI which were created from the grounds up with the cited references in the records (and in the focus of the whole project).

Sugimoto and Larivière (2018, 30) wrote about this: “The establishment of Scopus is often heralded as a successful case of vertical integration, in which the firm that creates the citation index also owns the material it is indexing”. The database presents itself as “the largest abstract and citation database of peer-reviewed literature: scientific journals, books and conference proceedings” [32]. One important aspect described by Sugimoto and Larivière (2018, 31-32) is that Scopus is much younger than WoS in terms of coverage. Although it contains records going back to 1823, its indexing is consistent only from 1996 onwards. Therefore, even if it is considered a high-quality source for contemporary analyses, it is of inferior quality to WoS for historical analyses.

Comparing Scopus and WoS, Moed (2017, 200) reported a study from Leiden University’s Centre for Science and Technology Studies (2007): “Scopus is a genuine alternative to WoS”. This claim was supported by the following figures:

- Scopus tends to include all science journals covered by the WoS (since 1996)

- And Scopus contains some 40% more papers

- Scopus is larger and broader in terms of subject and geographical coverage

- Web of Science is more selective in terms of citation impact

In 2015 Scopus also added the Scopus Article Metrics module, which provides altmetrics data on the usage, captures, mentions and citations of each document indexed in the database. Altmetrics are scholarly communication indicators based on the social Web [33]: a set of diverse metrics, for example, how many times a paper was shared in a social network like Twitter, or how many times it was saved in a reference manager like Mendeley (Souza 2015, 58). Such platforms are not citation indexes, although they make use of citation data.

The Scopus Article Metrics module includes new metrics based on four alternative metrics categories:

- Scholarly Activity: Downloads and posts in common research tools such as Mendeley and CiteULike

- Social Activity: Mentions characterized by rapid, brief engagement on platforms used by the public, such as Twitter, Facebook and Google

- Scholarly Commentary: Reviews, articles and blogs by experts and scholars, such as F1000 Prime, research blogs and Wikipedia

- Mass Media: Coverage of research output in the mass media (e.g., coverage in top tier media)

Other databases are also using altmetrics, including Dimensions (Section 5.6) — and more will probably do so in the future.

- Website: https://www.scopus.com

- Content coverage description: https://www.elsevier.com/__data/assets/pdf_file/0007/69451/0597-Scopus-Content-Coverage-Guide-US-LETTER-v4-HI-singles-no-ticks.pdf

- Journal list: https://www.scopus.com/sources.uri?zone=TopNavBar&origin=searchbasic

5.4 Google Scholar (GS)

GS was launched in November 2004 and was originally intended as a tool for researchers to find and retrieve the full text of documents; however, Sugimoto and Larivière (2018, 32) wrote that “few years after the introduction of the tool, bibliometric indicators were added to the online platform at the individual and journal level under the rubric Google Scholar Citation” [34]. As documented in Section 6 below, GS has a much broader coverage than other citation databases, a fact also emphasized by Moed (2017, 115):

Google Scholar does cover a large number of sources (journals, books, conference proceedings, disciplinary preprint archives or institutional repositories) that are not indexed in WoS or Scopus, and thus has a much wider coverage (e.g., Moed, Bar-Ilan and Halevi 2016); its surplus is especially relevant for young researchers.

In addition to online journals (acquired both by agreement with publishers [35] and by crawling webpages), GS also uses Google’s own product Google Books to acquire citation information from books. Moed (2017, 207) summarized the attributes of GS in this way:

- Google Scholar is a powerful tool to search relevant literature

- It is also a fantastic tool to track one’s own citation impact

- It is up-to-date, and has a broad coverage

- Its online metrics features are poor

- Use in research evaluation requires data verification by assessed researchers themselves

GS itself has poor search facilities, but Anne-Wil Harzing has developed the free software Publish or Perish (Harzing 2010; 2011) to gather data from Google Scholar and other citation databases.

Moed (2017), Sugimoto and Larivière (2018) and other researchers consider that there are data quality issues with GS because of its mechanical way of covering sources: it indexes sources available on the Internet if its algorithm identifies them as scholarly, a judgment based on certain characteristics, such as the presence of a reference list. By contrast, WoS and Scopus have an active content advisory board responsible for quality control. Moed (2017) also states that Google Scholar’s online metrics features are poor. The bibliometric aspect of the platform is limited to individual and journal-level metrics, as these are the only indicators it aggregates.

Also, very little information about its coverage and citation counts has been provided by the producer (Kousha and Thelwall 2007; Gray et al. 2012; Sugimoto and Larivière 2018). These problems are further addressed in Section 6.

- Website: https://scholar.google.com

5.5 Microsoft Academic (MA)

MA is a free public Web search engine for academic publications re-launched by Microsoft Research in 2016. It replaces the earlier Microsoft Academic Search (MAS), which ended development in 2012.

In her study on the coverage of MA, Harzing (2016, 1646) concludes that “only Google Scholar outperforms Microsoft Academic in terms of both publications and citations”. Hug, Ochsner and Brändle (2017) concluded that MA outperforms GS in terms of functionality, structure and richness of data as well as with regard to data retrieval and handling, but had reservations and pointed out that further studies are needed to assess the suitability of MA as a bibliometric tool. Further studies of MA include Harzing and Alakangas (2017a; 2017b), Hug and Brändle (2017) and Thelwall (2017; 2018a).

It was announced on May 4th, 2021 that Microsoft Research will continue to support the automated AI agents powering Microsoft Academic services through the end of calendar year 2021. They encourage existing Microsoft Academic users to begin transitioning to other equivalent services like Dimensions, CrossRef, Lens, Semantic Scholar and others (Microsoft Academic 2021). Microsoft Research considers that the MA research project has achieved its objective “to remove the data access barriers for our research colleagues, it is the right time to explore other opportunities to give back to communities outside of academia”. They are proud “to contribute to a culture of open exchange and a growing ecosystem of collaborators” (Microsoft Academic 2021).

- Website: https://academic.microsoft.com/home

5.6 Dimensions

Dimensions was launched by Digital Science in January 2018. The database is offered in three different versions, a free version (Dimensions) and two paid versions (Dimensions plus and Dimensions analytics) [36].

Orduña-Malea and Delgado-López-Cózar (2018) described its history and functionality. The most significant attribute is perhaps that it classifies articles individually (based on, e.g., the Australian and New Zealand Standard Research Classification).

Harzing (2019) examined how Dimensions covered her own scholarly output and found that 83 of 84 journal articles were included (only surpassed by Google Scholar and Microsoft Academic), 1 out of 4 books and 1 out of 25 book chapters. Her overall conclusion — based on the small sample — was that Dimensions is better than Scopus and the Web of Science but is beaten by Google Scholar and Microsoft Academic. Thelwall (2018b) examined its coverage of journal articles (but not for other document types) and suggested on that basis that Dimensions is a competitor to the Web of Science and Scopus for non-evaluative citation analyses and for supporting some types of formal research evaluations. However, because it is currently indirectly spammable through preprint servers (e.g., by uploading batches of low-quality content), in its current form it should not be used for bibliometrics-driven research evaluations.

5.7 Other citation databases

A growing number of standard bibliographic database providers are now integrating citation data into their databases. For example, beginning in 2001, the PsycInfo database published by the American Psychological Association started to include references appearing in journal articles, books, and book chapters, and has since then also begun to include references in some records from earlier years.

- Homepage: https://www.apa.org/pubs/databases/psycinfo/cited-references

- Journal Coverage List: https://www.apa.org/pubs/databases/psycinfo/coverage

SciFinder is a product from Chemical Abstracts Service (CAS) that was launched as a client-based chemistry database in late 1997; its citation analysis features were added in 2004 and in 2008 a Web version was released. See further in Li et al. (2010).

- Homepage: https://www.cas.org/products/scifinder-n

BIOSIS Citation Index was created in 2010 and released on the Web of Knowledge. It combines the indexed life science coverage found in BIOSIS Previews (Biological Abstracts, Reports, Reviews, and Meetings) with the power of cited reference searching and indexes data from 1926 to the present.

Lens is an open facility for discovery, analysis, metrics and mapping of scholarly literature and patents. It started as the world's first free and open full text patent search capability in 1991, founded by Cambia. It offers discovery, analytics, and management tools, including patents, API & data facilities, and collections. It is an aggregator of metadata combining three unique content sets and one management tool as a base offering: scholarly works, patents, PatSeq and collections. Jefferson et al. (2019) state that Lens uses "a 15 digit open and persistent identifier, LensID, to expose credible variants, sources and context of knowledge artifacts, such as scholarly works or patents, while maintaining provenance, and allowing aggregation, normalization, and quality-control of diverse metadata".

- Website: https://lens.org

6. Comparative studies of 6 major citation indexes

ISI and its successor Thomson ISI seem to have had a de facto monopoly on citation indexes from the early 1960s until about 2000 (disregarding Shepard’s Citations, cf. Section 7.1). Thereafter other citation indexes began to appear. As already stated, PsycInfo began adding citation information to its database in 2001. At that time, it was rather unthinkable that major competitors to the ISI databases should be developed because of the huge costs of establishing and managing databases with such a broad coverage. However, as we saw in Section 5, many surprising developments have taken place since then, and today we have a range of competing citation indexes. This section provides information on the relative strengths and weaknesses of 6 of the most important ones: Crossref, Dimensions, Google Scholar (GS), Microsoft Academic (MA), Scopus and WoS.

The first eye-catching difference between these databases is that WoS and Scopus are proprietary databases (with paywalls) while Crossref, GS, MA and Dimensions are all free search services without paywalls (open access). However, this article will examine which databases perform best, disregarding the costs associated with their use. As expressed by Jacso (2005, 1538) “open access should not provide excuse for ill-conceived and poorly implemented search options, and for convoluted, and potentially misleading presentation of information” (if such should be the case). It should also be said that this section focusses on the 6 databases’ ability to track citations, whereas other issues, such as providing h-indices, are not considered.

Another distinction often used is to classify databases as “controlled” versus “non-controlled”. WoS and Scopus, for example, have been characterized as controlled (or even “highly controlled”) databases whereas GS is an uncontrolled (or low-controlled) database (e.g. by López-Cózar, Orduña-Malea and Martín-Martín 2019 and Halevi, Moed and Bar-Ilan 2017). However, as we saw in Section 2, “control” (like “unification”) is a relative concept, and it should therefore always be specified in what way databases are controlled, as all databases in different ways make use of algorithms and mechanical procedures. It is probably better to characterize Crossref, GS, MA and Dimensions as crawl-based databases. This distinction seems to be important for their functionalities. Öchsner (2013, 31-46), for example, found that Web of Science and Scopus offer quite similar functionalities and coverage and maintain their own databases. WoS has always built its databases from physical or electronic access to published journals. Scopus could do likewise, as described in Section 5.3. The other four (free) citation search services depend on information from publishers’ homepages and from other parts of the Web.

6.1 Coverage

Coverage may be described by the database itself or by bibliometric studies. Li et al. (2010) found that both Web of Science and Scopus missed some references from publications that they cover, while Google Scholar did not disclose which publications were indexed and its results are not downloadable, so it is difficult to determine which citations are missing. Also, Google Scholar included citations from websites, and therefore one of its problems is the duplication of citing references.

Since the launch of Scopus and GS in 2004 there have been many studies comparing these databases with WoS, with each other and with other databases such as MEDLINE. As described by Martín-Martín et al. (2018, 1161), these studies do not provide a clear view of the respective strengths of these databases:

A key issue is the ability of GS, WoS, and Scopus to find citations to documents, and the extent to which they index citations that the others cannot find. The results of prior studies are confusing, however, because they have examined different small (with one exception) sets of articles. […] For example, the number of citations that are unique to GS varies between 13% and 67%, with the differences probably being due to the study year or the document types or disciplines covered. The only multidisciplinary study (Moed, [Bar-Ilan and Halevi] 2016) checked articles in 12 journals from 6 subject areas, which is still a limited set.

The study by Martín-Martín et al. (2018) is important for at least four reasons:

- It is rather new, which is important because the databases have added many references since their start; therefore, many former studies may provide obsolete results;

- It is the first large scale investigation;

- This study is the broadest with respect to subject fields examined (252 subject categories);

- This study reviews former studies and relates its own findings to the former ones.

The overall conclusion was that in all areas GS citation data is essentially a superset of WoS’s and Scopus’s, with substantial extra coverage. Martín-Martín et al. (2018, 1175) wrote:

This study provides evidence that GS finds significantly more citations than the WoS Core Collection and Scopus across all subject areas. Nearly all citations found by WoS (95%) and Scopus (92%) were also found by GS, which found a substantial amount of unique citations that were not found by the other databases. In the Humanities, Literature & Arts, Social Sciences, and Business, Economics & Management, unique GS citations surpass 50% of all citations in the area.

And:

In conclusion, this study gives the first systematic evidence to confirm prior speculation (Harzing, 2013; Martín-Martín et al., 2018; Mingers & Lipitakis, 2010; Prins et al., 2016) that citation data in GS has reached a high level of comprehensiveness, because the gaps of coverage in GS found by the earliest studies that analysed GS data have now been filled.

This conclusion is reinforced by Gusenbauer (2019), who found: “Google Scholar’s size might have been underestimated so far by more than 50%. By our estimation Google Scholar, with 389 million records, is currently the most comprehensive academic search engine”.
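The overlap figures quoted above (the share of WoS and Scopus citations also found by GS, and the share of citations unique to GS) are in essence set comparisons. The following sketch shows one way such percentages could be computed; the function name and the toy citation identifiers are hypothetical and the numbers do not reproduce the study's data or its exact method.

```python
def overlap_report(found: dict, reference_db: str) -> dict:
    """Compare the citations found by each database with those of a reference database."""
    reference = found[reference_db]
    report = {}
    for name, citations in found.items():
        if name == reference_db or not citations:
            continue
        # Share of this database's citations that the reference database also found.
        shared = len(citations & reference)
        report[f"{name}_also_in_{reference_db}"] = 100 * shared / len(citations)
    # Citations found by any database other than the reference database.
    others = set().union(*(c for n, c in found.items() if n != reference_db))
    everything = reference | others
    # Share of all citations that only the reference database found.
    report[f"unique_to_{reference_db}"] = 100 * len(reference - others) / len(everything)
    return report


# Toy data: each string stands for one citing document (hypothetical identifiers).
found = {
    "GS": {"c1", "c2", "c3", "c4", "c5", "c6", "c7", "c8"},
    "WoS": {"c1", "c2", "c3", "c9"},
    "Scopus": {"c1", "c2", "c4", "c10"},
}

report = overlap_report(found, "GS")
print(report)
```

In this toy case three of the four WoS citations are also in GS (75%), and four of the ten distinct citations are found only by GS (40%). The hard part in real studies is deciding when two records from different databases refer to the same citing document, which the sketch takes as given.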

Concerning coverage, given the limitations of any study, GS was clearly shown to be superior compared with WoS and Scopus. Before we consider other aspects than coverage, let us consider how other databases compare. At the time of writing, GS and MA are considered the two largest citation databases. Hug and Brändle (2017, 1569) concluded:

Our findings suggest that, with the exceptions discussed above, MA performs similarly to Scopus in terms of coverage and citations […]. With its rapid and ongoing development, MA is on the verge of becoming a bibliometric superpower […]. The present study and the studies of Harzing and Alakangas (2017a, b) provide initial evidence for the excellent performance of MA in terms of coverage and citations.

Hug and Brändle (2017) also provided the following figures: “MA is also progressing quickly in terms of coverage. According to the development team of MA, the database expanded from 83 million records in 2015 (Sinha et al. 2015) to 140 million in 2016 (Wade et al. 2016) and 168 million in early 2017 (A. Chen, personal communication, March 31, 2017). It is currently growing by 1.3 million records per month (Microsoft Academic 2017).” Again, these figures must be considered with reservations, and should be followed by independent studies. However, the current evidence suggests that MA has almost (but not fully) the same coverage as GS, and because both databases develop quickly, it is impossible to predict what will be the case even in the short term.

These are relative figures, but how many of the scholarly documents available on the Web do they cover? Our knowledge of this is limited and uncertain. Moed (2017, 194) mentioned the following figures for the number of scientific/scholarly journals, and wrote “as Garfield has pointed out, perhaps the most critical issue is how one defines the concept of journal”.

- Derek de Solla Price (1980): 40,000

- Garfield (1979): 10,000 [37]

- Scopus: covers in 2014 about 19,000

- Web of Science: covers in 2014 11,000

- Ulrichsweb (6 Febr 2015):

  - Active, academic/scholarly journals: 111,770 (100%)

  - Peer-reviewed: 66,734 (60%)

  - Available online: 47,826 (43%)

  - Open access: 15,025 (13%)

  - Included in JCR: 10,916 (10%)

Another estimate is reported by Khabsa and Giles (2014) indicating that at least 114 million English-language scholarly documents are accessible on the Web, of which Google Scholar at that time had nearly 100 million.

Harzing (2019) compared how Crossref, Dimensions, Google Scholar, Microsoft Academic, Scopus and the Web of Science covered her own complete bibliography (84 journal articles, 4 books, 25 book chapters, 100+ conference papers, 200+ other publications, 2 software programs, in total 400+ papers). Of the journal articles Scopus found 79 and WoS 61; all the other databases found all 84 or 83 of the journal articles. Other document types displayed greater variation, but the main tendency is clear: Scopus and WoS retrieve fewer references than the rest, and for all document types none of the databases performed better than GS. If Scopus and WoS still have a role to play, it must be by other qualities than their coverage. We will therefore investigate such issues in the next subsections. Harzing’s conclusion (2019, 341) was:

Overall, this first small-scale study suggests that, when compared to Scopus and the Web of Science, Crossref and Dimensions have a similar or better coverage for both publications and citations, but a substantively lower coverage than Google Scholar and Microsoft Academic. If our findings can be confirmed by larger-scale studies, Crossref and Dimensions might serve as good alternatives to Scopus and the Web of Science for both literature reviews and citation analysis. However, Google Scholar and Microsoft Academic maintain their position as the most comprehensive free[38] sources for publication and citation data.

Concerning coverage, the speed of indexing is also a relevant factor (the coverage of the newest documents): how long does it take from the publication of a document until it has been indexed by a citation database? Clearly, GS and other crawl-based databases are faster than WoS (which in turn is faster than databases depending on human indexing, such as PsycInfo).

6.2 Quality of indexed documents

We have seen that SCI/WoS based their selection mainly on journal impact factors (JIF) (and Garfield’s law of concentration), presuming that journals with a high JIF are the most important. In addition, it describes its own selection in this way [39]:

Since Clarivate Analytics is not a publisher, we are able to serve as an objective data provider. Web of Science Core Collection includes a carefully curated collection of the world’s most influential journals across all disciplines. And, because quality and quantity aren’t mutually exclusive, Clarivate Analytics has a dedicated team of experts who evaluate all publications using our rigorous selection process. The journal selection process is publisher neutral and applied consistently to all journals from our 3,300 publishing partners. With consistent and detailed selection criteria covering both quantitative and qualitative assessment, we select only the most relevant research from commercial, society, and open access publishers. Existing titles are constantly under review to ensure they maintain initial quality levels.

This quote contains a hidden criticism of Scopus (because Elsevier is the publisher both of Scopus and of very many academic journals) and, in particular, of GS, which bypasses all the peer-reviewed journals’ quality filters. The question of quality selection is perhaps just a special case of the question whether bibliographical databases (or libraries, encyclopedias etc.) need quality criteria and a selection process to meet such criteria, as, for example, also claimed by MEDLINE [40]. Such ideas are challenged today, and not only by GS [41]. So, a question is whether, for example, Garfield’s law of concentration and other kinds of quality selection are today bypassed by attempts to increase coverage in citation indexes? WoS, of course, uses selection as an argument that users should prefer this database, but such claims need to be based on research. Probably we need both databases with quality control and databases with increased coverage. A study by Acharya et al. (2014) found, however, that important work is more and more published in non-elite journals. A selective citation index processing only journals with a higher citation impact could therefore miss important work. But such a claim contradicts dominant practices, e.g. to evaluate researchers by considering the quality level of the journals in which they publish.

Harzing and Alakangas (2016, 802) wrote:

Unlike the Web of Science and Scopus, Google Scholar doesn’t have a strong quality control process and simply crawls any information that is available on academic related websites. Although most of Google Scholar’s results come from publisher websites, its coverage does include low quality “publications” such as blogs or magazine articles.

Sugimoto and Larivière (2018, 32) shared this view: “Google Scholar often aggregates nonjournal articles—from PowerPoint presentations to book chapters, basically anything that bears the trappings of an academic document”. However, an anonymous reviewer wrote in this connection:

Cassidy [Sugimoto] and Larivière (2018) [claim …] that GS inflates citation counts with many worthless, non-scholarly citations. This is by now something of a myth. The faulty citations in GS tend to come from algorithmic misattributions and can be manually corrected. Both Cassidy [Sugimoto] and Larivière maintain GS pages, and I’d challenge them to point out citations they have received that are academically worthless, because the overwhelming majority of the citations Google lists come from perfectly respectable sources. (The same holds for any other researcher with a Google Scholar page.)

Hjørland (2019, Appendix 8) provides an example that illustrates the nature of the citations provided by GS in addition to those provided by WoS. For the article Hjørland (1998), excluding self-citations, WoS found 21 citations and GS an additional 35 citations. The additional citations were mostly university theses, conference proceedings and journals not covered by WoS. It confirms the anonymous reviewer’s claim that nothing that can formally be considered academically worthless was added by GS. It should be added, however, as found by Martín-Martín et al. (2018, 1175), that the extra citations found by GS tend to be much less cited compared to citing sources that are also in WoS or Scopus.

A study by Gehanno, Rollin and Darmoni (2013) found that of 738 original studies included in their gold standard database all were found in Google Scholar. They wrote (p. 1):

The coverage of GS for the studies included in the systematic reviews is 100%. If the authors of the 29 systematic reviews had used only GS, no reference would have been missed. With some improvement in the research options, to increase its precision, GS could become the leading bibliographic database in medicine and could be used alone for systematic reviews.

The article further stated (p. 4):

This 100% coverage of GS can be seen as amazing, since no single database is supposed to be exhaustive, even for good quality studies. For example, the recall ratios of Medline for randomized control trials (RCTs) only stand between 35% and 56% [Türp, Schulte and Antes 2002; Hopewell et al. 2002]. Since GS accesses only 1 million of the some 15 million records at PubMed, how can our results be explained? In fact, through agreements with publishers, GS accesses the “invisible” or “deep” Web, that is, commercial Web sites the automated “spiders” used by search engines such as Google cannot access.

Findings such as these suggest that databases using automatic acquisition methods based on agreements with publishers challenge both citation databases like WoS and Scopus and traditional documentation databases like MEDLINE, which so far have been considered the gold standard for serious document retrieval. It should be said, however, that there are still open questions and that Gehanno, Rollin, and Darmoni (2013) also had reservations about their own study and found that GS requires improvement in the advanced search features to improve its precision [42].

6.3 Control over and reliability of the search

A third issue concerns the users’ control over the search process (related to the transparency of the databases) and the reliability of search. Hjørland (2015) argued for the importance of human decision-making during searches, implying that information retrieval should be considered a learning process in which the genres and terminology of the domain are learned as well as the qualities of relevant databases. Many researchers consider, for example, lists of indexed journals and related information important for users. Such lists are produced by ‘classical’ databases and by WoS and Scopus, but not by GS, for example. Gusenbauer (2019, 199) wrote in this connection:

it remains unclear why Google Scholar does not report its size. Given the unstable nature of Google Scholar’s QHC [query hit count] it might be possible that Google itself either has difficulties accurately assessing its size or does not want to acknowledge that its size fluctuates significantly. Perhaps it is important to Google to convey to those searching for information that it offers a structured, reliable, and stable source of knowledge. If Google maintains its policy of offering no information, scientometric estimation will have to remain the sole source of information on its size.

Gusenbauer (2019, 197) also wrote:

The exact workings of Google Scholar’s database remain a mystery. While our results remained stable during the examination period, we verified the results a few months later and found considerable differences. Our findings of Google Scholar’s lack of stability and reliability of its reported QHC [query hit count] are in line with earlier research.

The article further stated (197; italics in original):

While some variation in QHCs seem to be commonplace among popular search engines, such as Bing or Google (Wilkinson and Thelwall 2013), it should not happen in the scientific context where study outcomes depend on the resources available in databases. Whenever QHC variations occur, the question remains whether they stem from actual variations in available records or mere counting errors by the search system. The former would be particularly problematic in the academic context where accuracy and replicability are important criteria. These problems seem to be shared only by search engines. We found that all of the bibliographic databases and aggregators we examined—EbscoHost, ProQuest, Scopus, and Web of Science—provide plausible QHC results. This is not surprising given these services access a stable and curated database over which they have extensive control.

Another drawback of using Google Scholar is the large number of duplicate papers retrieved by the citation index. These have been termed stray citations [43], where minor variations in referencing lead to duplicate records for the same paper (compare the presentation of unification in Section 2).
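The way minor variations in referencing produce stray citations can be illustrated with a minimal sketch. It assumes a deliberately crude matching rule (ignore accents, case and punctuation); the function names `reference_key` and `unify` and the sample references are invented for illustration and do not describe how any of the databases discussed here actually unify records.

```python
import re
import unicodedata
from collections import defaultdict


def reference_key(reference: str) -> str:
    """Build a crude matching key by dropping accents, case and punctuation."""
    decomposed = unicodedata.normalize("NFKD", reference)
    without_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Every run of characters other than digits and a-z becomes one space.
    return re.sub(r"[^0-9a-z]+", " ", without_marks.lower()).strip()


def unify(references):
    """Group reference strings that share the same matching key."""
    groups = defaultdict(list)
    for reference in references:
        groups[reference_key(reference)].append(reference)
    return dict(groups)


# Invented placeholder references, not real publications.
SAMPLE = [
    "Doe, J. (2001). An Example Paper. Example Journal 12: 34-56.",
    "DOE J 2001 An example paper, Example Journal, 12, 34–56",
    "Doé, J. (2001) An Example Paper. Example Journal 12:34-56",
    "Roe, R. (2010). Another Example. Example Review 3: 1-9.",
]

# Print each unified record with the number of variants merged into it.
for key, variants in unify(SAMPLE).items():
    print(len(variants), key)
```

Without such a step, the first three strings would be counted as three separate cited works. A rule this simple would miss variants with abbreviated journal titles or wrong page numbers, which is one reason unification is difficult in practice.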

6.4 Search options and metadata

The 6 databases discussed in this section have very different search options, and for some it is necessary to use software such as Publish or Perish (Harzing 2007 and later updates), which also has to be evaluated. Unfortunately, it has become difficult to find exact descriptions of search options. We are missing “blue sheets” as originally developed by the database host Dialog [44].

Searchable fields in the 6 databases (based on Gusenbauer 2019, 179-182, the databases themselves and the cited sources; all are citation databases and therefore allow searching citing references):

- Crossref: “Since November 2017, Publish or Perish (Harzing 2007) has provided the option of searching for authors, journals and key words in Crossref” (Harzing 2019, 342).

- Dimensions: Keyword searching in: Full data, title and abstract or DOI (+ Abstract searching: copy in an abstract).

Provides article level subject classification!

Filters: Publication year, researcher, fields of research, publication type, source title, journal list and open access.

- Google Scholar: GS uses search options known from Google [45]. You may search by author name, article title and/or keywords, find scholarly documents that cite a particular article, and look at the citation context of an article [46].

Martín-Martín et al. (2018, 1175) found that there is no reliable and scalable method to extract data from GS, and that the metadata offered by the platform are still very limited. (Again, Publish or Perish and its user guide (Harzing 2007) may be helpful.)

- Microsoft Academic: MA applies “Semantic search”, which, however, does not in itself indicate what search options are available [47].

Harzing’s Publish or Perish User’s Manual, https://harzing.com/resources/publish-or-perish/manual, provides the following information: Authors, years, affiliations, study field, full journal title, full article title, all of the words/any of the words (title), does not have an exact phrase search, cannot search for keywords in abstract or full-text of articles.

Search filters: Content types (no document type classification), people, research areas, published date (last week, month or year).

- Scopus: Author, first author, source title, article title, abstract, keywords, affiliation (name, city, country), funding (sponsor, acronym, number), language, ISSN, CODEN, DOI, references, conference, chemical name, ORCID, CAS, year, document type, access type.

Scopus has “author identifier” to disambiguate author names.

Since 2015 Scopus has had the Scopus Metrics Module (see Section 5.3).

- Web of Science: Topic [i.e., title + abstract + author keywords + keywords plus], title, author, author identifiers, group author, editor, publication name, DOI, year published, address, open access, highly cited, research domain (only journal level subject classification), language, research area (only journal level subject classification), funding agency, group/corporate authors, document types. (WoS can search exact phrases [48] and has other advanced search features.) WoS has a search option “author search” to help disambiguate author names.

The WoS platform does not allow combinations of advanced searches and cited reference searches [49].

WoS and Scopus offer at present the most advanced search possibilities and should probably be preferred for advanced searching in many situations. The most important alternative functions seem to be (1) Google Scholar’s possibility for full-text searching and (2) Dimensions’ subject classification of single documents (as opposed to journal level classification only). However, the different databases have many unique features, strengths as well as serious weaknesses, and qualified search decisions must be based on professional knowledge of the characteristics of all the citation indexes.

6.5 Conclusion of section

The six databases differ considerably, not just in the total number of documents indexed and citations provided, but also in which fields, document types and time spans are covered, and all of this is changing over time. It has up to now been the consensus that the databases supplement each other. As Rousseau, Egghe and Guns (2018, 152) wrote:

Many colleagues performed investigations comparing GS, Scopus and the WoS [among others]. Because of unique features related to each of these databases a general consensus, see e.g. (Meho and Yang 2006 [i.e. 2007]) for one of the earliest studies, is that the three databases complement each other.

Is this consensus still valid? Rousseau, Egghe and Guns (2018) do not answer this question explicitly but seem to agree. The research reviewed in this Section 6 indicates the same, although, as stated, the market is extremely dynamic and therefore needs to be monitored. GS almost always provides a larger total number of citations but has poor search possibilities and other problems, such as a lack of stability, e.g., citations, once given, may later disappear. This should not be the case, because citations are provided by publications, and a document, once published, cannot cease to exist (either it never existed and thereby represents a bibliographic ghost, or its representation in the database has disappeared).

The market for citation indexes is at present developing extremely dynamically, and many conclusions drawn just a few years ago may therefore be outdated. In addition, the relevant attributes are very complex, so one should be careful not to evaluate databases on single factors, such as the total number of references or citations they provide, but should also consider the attributes of databases in relation to the specific task and domain for which they are to be used. This Section 6 has provided some general information considered useful as background knowledge, but it cannot provide detailed information about all relevant properties of all databases.

7. Predecessors to the Science Citation Index

It was the SCI that made citation indexes central to information science; only retrospectively has it been considered important to look back and recognize the earlier citation indexes. For this reason, this section is placed after the others.

Garfield (1979, 7) acknowledges that “Shepard’s Citations is the oldest major citation index in existence; it was started in 1873” (see further below, Section 7.1). However, it has later been found that the history of citation indexes is even older. Shapiro (1992, abstract) wrote:

Historians of bibliometrics have neglected legal bibliometrics almost completely. Yet bibliometrics, citation indexing, and citation analysis all appear to have been practiced in the legal field long before they were introduced into scientific literature. Publication counts are found in legal writings as early as 1817. Citation indexing originated with “tables of cases cited,” which date at least as far back as 1743. A full-fledged citation index book was published in 1860. Two ambitious citation analyses of court decisions appeared in 1894 and 1895.

Weinberg (1997; 2004) traced the citation index further back in time: nearly two centuries before Shepard’s Citations, a legal citation index was embedded in the Talmud. In addition, the citation index book Mafteah ha-Zohar was published in 1566; a Biblical citation index was printed in the prior decade; and a Hebrew citation index to a single book is dated 1511. All of these appeared some three centuries before the full-fledged citation index book that Shapiro (1992) reported as published in 1860. In describing the Hebrew Citation Index, Weinberg (1997, 318) states that the citations discussed in her paper “are not to the works of individuals, but to anonymous classics, mainly the Bible and Talmud”. She affirms that prestige and promotion are not relevant factors, as they are in modern citation analysis, and wrote (126): “many scientific indexing structures thought to have originated in the computer era were invented as much as a millennium earlier, in the domain of religion”.

7.1 Shepard’s Citations

This citation index started as lists in a series of books indexed to different jurisdictions in 1873 by Frank Shepard (1848–1902). The name Shepard's Citations, Inc. was adopted in 1951. In 1999 LexisNexis released an online version of the Shepard's Citation Service and the use of the print version is declining.

The verb shepardizing refers to the process of consulting Shepard's to see if a case has been cited by later cases and, for example, been overturned, reaffirmed or questioned. It is also used informally by legal professionals to describe citations in general. (See also the description on Wikipedia, retrieved 2019.) See further:

- Shepard’s Citations on LexisNexis: https://www.lexisnexis.com/en-us/products/lexis-advance/shepards.page

8. Citations as subject access points (SAP)

There is a strong connection between the information retrieval domain and subject access points (SAP) [50]. Two kinds of SAP should be distinguished for this discussion of citation indexes:

- Terms, either assigned to documents by indexers (e.g., descriptors from → thesauri) or derived from the document (e.g., words from titles, as in so-called KWIC indexes, or from the full text); this is so-called traditional or conventional indexing; and

- the use of bibliographical references as SAP (as used in citation databases).

Both terms and references may function as concept symbols as pointed out by Small (1978).
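The difference between the two kinds of SAP can be made concrete with a minimal sketch that builds one inverted index from title words and one from cited references. The records, identifiers and function names below are invented placeholders, and the sketch makes no claim about how any actual citation index is implemented.

```python
from collections import defaultdict

# Invented toy records; identifiers, titles and reference lists are placeholders.
DOCUMENTS = {
    "doc1": {"title": "Citation indexing in chemistry", "references": ["refA", "refB"]},
    "doc2": {"title": "Retrieval of chemical literature", "references": ["refA"]},
    "doc3": {"title": "Citation behavior of historians", "references": ["refC"]},
}


def build_term_index(documents):
    """Derived-term access points: map each title word to the documents using it."""
    index = defaultdict(set)
    for doc_id, record in documents.items():
        for word in record["title"].lower().split():
            index[word].add(doc_id)
    return index


def build_reference_index(documents):
    """Citation access points: map each cited reference to the documents citing it."""
    index = defaultdict(set)
    for doc_id, record in documents.items():
        for cited in record["references"]:
            index[cited].add(doc_id)
    return index


term_index = build_term_index(DOCUMENTS)
reference_index = build_reference_index(DOCUMENTS)

print(sorted(term_index["citation"]))   # documents sharing a title word
print(sorted(reference_index["refA"]))  # documents sharing a cited reference
```

In this toy data, doc1 and doc2 share no title word ("chemistry" versus "chemical") but are linked through the shared reference refA, whereas doc1 and doc3 share the word "citation" without citing any common source.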

One purpose of research in this area is to shed light on the relative advantages and disadvantages of these two kinds of indexing and the SAP that they provide. About traditional indexing Garfield (1964, 144) stated that “the ideas expressed in a particular source article are reflected in the index headings used by some conventional indexing system. In that case, a display of the descriptors or subject headings assigned to that paper by the indexer constitutes a restatement of the subject matter of that paper in the indexer's terminology” [51]. Garfield (1979, 2-3) further wrote:

There also is a qualitative side to search effectiveness that revolves around how precisely and comprehensively an individual indexing statement describes the pertinent literature. The precision of the description is a matter of semantics, which poses a series of problems in a subject index. The basic problem is that word usages varies from person to person. It is patently impossible for an indexer, no matter how competent, to reconcile these personal differences well enough to choose a series of subject terms that will unfailingly communicate the complicated concepts in a scientific document to anyone who is searching for it.

Garfield here is writing about manual indexing, but in principle algorithmic indexing is also a subjective process (cf. Hjørland 2011); his statement can therefore be generalized to cover both intellectual and → automatic forms of indexing. Garfield (1979, 3) wrote about bibliographical references as SAP in contrast to terms:

Citations, used as indexing statements, provide these lost measures of search simplicity, productivity, and efficiency by avoiding the semantic problems. […] In other words, the citation is a precise, unambiguous representation of a subject that requires no interpretation and is immune to changes in terminology.

We shall see below that research has painted a more complicated and nuanced picture of the relative strengths and weaknesses than the one quoted here from Garfield. First, it should be recognized that the problem of information retrieval is deeper than semantics. As Swanson concluded (1986, 114): “Any search function is necessarily no more than a conjecture and must remain so forever”. It is not just about the meaning of terms assigned to documents; at the deepest level it is about which documents are relevant for a specific query. By implication, both term indexing and citation indexing are no more than conjectures about what best facilitates retrieval.